Start with a concise two-phase pilot to validate the feasibility of distributed ledger technology in provenance networks. Phase one targets 6–8 suppliers, 2 manufacturers, 1 retailer, and 1 logistics partner; phase two expands to 15–20 participants. Track data quality, event latency, and recall response times, and use a secure data exchange tool with standard interfaces to minimize changes to existing systems. This concrete plan limits risk and demonstrates measurable gains in speed, transparency, and integrity.

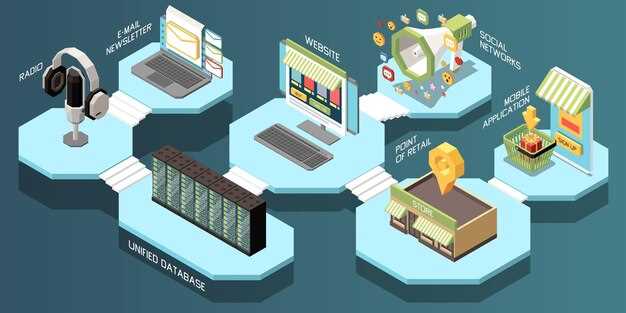

Practical applications include provenance visibility, supplier onboarding, batch traceability, and recall readiness. Monitoring data quality yields actionable insights. A secure exchange tool that aligns with existing platforms enables communication across companies, so staff can respond faster. The infrastructure scales from edge nodes to cloud servers; the Meyliana reference shows how familiar ecosystems behave under real-world conditions. Google-style analytics support performance evaluation, especially during periods of volatility. Secure relationships among companies, platforms, and suppliers underpin all of this.

Feasibility findings shape infrastructure investments, and a phased rollout delivers quick wins. A fast feedback loop relies on a lightweight feature set, while robust monitoring provides continuous evaluation. Staff training matters for secure workflows, and clear documentation supports collaboration and communication across the company. Relationships with known platforms, suppliers, and customers strengthen resilience.

Guidelines for the study cover interoperability standards, risk modeling, and regulatory alignment. Pilot results publish ROI metrics such as shorter recall times and improved data accuracy. Google-style dashboards provide continuous evaluation of cost efficiency, and the same approach can scale across platforms and diverse markets. A modular infrastructure supports rapid expansion from regional networks to global ecosystems, and a lightweight hello protocol ensures reliable data exchange. Meyliana-style scenarios illustrate practical friction points; staff training and relationship management across known participants gain momentum.

Implementing Real-Time Data Provenance and Integrity in Track-and-Trace Workflows

Recommendation: Deploy a hybrid data provenance engine that binds every data point at capture to a tamper-evident hash, run real-time integrity checks on every event, and publish auditable summaries to public dashboards. This design closes visibility gaps, raises throughput, and builds clearer trust among buyers, platforms, and intermediaries. Data points recorded at the source remain traceable back to their origin. Shen, Khan, Kaldoudi, and Cole report a positive impact in pilot projects; the transformed risk environment remains trustworthy, and public authorities review the results.

Implementation plan

In practice, start with edge capture at food-handling facilities. Attach a cryptographic seal to every record and synchronize clocks with a reliable time source. Each record carries a source fingerprint and is examined by automated validators on gateway nodes. If a mismatch occurs, a real-time alert halts downstream sales until verification completes.
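The sealing and validation steps above can be sketched in Python. This is a minimal illustration, not a reference design: it assumes SHA-256 as the seal, a JSON serialization of each record, and a simple hash chain in which every seal also covers the previous one. The names `seal`, `SealedRecord`, `append_record`, and `first_mismatch` are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass

GENESIS = "0" * 64  # assumed placeholder seal for the first record in a chain


def seal(payload: dict, source_id: str, captured_at: str, prev_seal: str) -> str:
    """Return a SHA-256 seal binding the payload to its source, time, and predecessor."""
    body = json.dumps(
        {"payload": payload, "source": source_id, "ts": captured_at, "prev": prev_seal},
        sort_keys=True,
        separators=(",", ":"),
    )
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class SealedRecord:
    payload: dict
    source_id: str      # fingerprint of the capturing device or facility
    captured_at: str    # timestamp from the synchronized clock, as text
    prev_seal: str
    seal: str


def append_record(chain: list, payload: dict, source_id: str, captured_at: str) -> SealedRecord:
    """Seal a new record at capture time and append it to the chain."""
    prev = chain[-1].seal if chain else GENESIS
    record = SealedRecord(payload, source_id, captured_at, prev,
                          seal(payload, source_id, captured_at, prev))
    chain.append(record)
    return record


def first_mismatch(chain: list):
    """Gateway-side check: index of the first record that fails verification, or None."""
    prev = GENESIS
    for index, record in enumerate(chain):
        expected = seal(record.payload, record.source_id, record.captured_at, record.prev_seal)
        if record.prev_seal != prev or expected != record.seal:
            return index
        prev = record.seal
    return None
```

A gateway validator could call `first_mismatch` on each incoming batch and raise the alert that halts downstream sales whenever the result is not `None`.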

Sections within the platform correspond to stages: intake, transformation, packaging, and distribution. Each section stores local hashes and forwards a compact pointer to a public replica of the ledger. Buyers subscribe to feeds for items of interest, and platforms offer filters by product type (food, fashion), batch, and item. Security controls are restricted to staff with verified roles, and the controls include decision tools that enable recalls.
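One way to build the compact pointer that each stage forwards is a Merkle root over its local hashes. The sketch below assumes SHA-256 and the common simplification of duplicating the last node on odd-sized levels; the source does not name a specific construction, and `merkle_root` and `stage_pointer` are illustrative names.

```python
import hashlib
from typing import Dict, List


def _h(data: bytes) -> bytes:
    """SHA-256 digest helper."""
    return hashlib.sha256(data).digest()


def merkle_root(leaf_hashes: List[bytes]) -> bytes:
    """Fold a list of leaf hashes into a single root hash."""
    if not leaf_hashes:
        raise ValueError("at least one leaf hash is required")
    level = list(leaf_hashes)
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # simplification: duplicate the last node
        level = [_h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]


def stage_pointer(stage: str, events: List[bytes]) -> Dict[str, object]:
    """Build the compact pointer a stage would forward to the public ledger replica."""
    root = merkle_root([_h(event) for event in events])
    return {"stage": stage, "count": len(events), "root": root.hex()}
```

The stage keeps its full event log locally and publishes only the pointer, so the public replica stays small while any later change to a local event changes the root.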

Use case mapping spans sectors: food, pharmaceuticals for patients, and fashion. Each sector applies the same provenance discipline; pharmaceuticals carry additional constraints that protect patients. Audits verify provenance across suppliers and intermediaries, and public risk signals trigger alerts.

Design considerations

Security policy rests on granular access control, and risk management aligns with regulatory requirements. We use three layers: source validation, validators at intermediaries, and public validators. Separation of duties reduces exposure, and throughput is maintained even while data is being pulled. A risk dashboard compares produced data against expected values, which strengthens public trust; buyers rely on these signals when making decisions.

Conclusion: Real-time data provenance and integrity become achievable through a pragmatic engine, source verification, and public dashboards. Studies by Khan and Shen show a lower risk of mix-ups, and Kaldoudi notes improved decision-making in recall cases. Buyers gain a clearer view, and the platform extends across products such as food, fashion, and patient care. Measured throughput stays high despite the added checks, and liveness signals support regulatory reviews.

Smart Contract Playbooks for Inventory Reconciliation and Automatic Replenishment

Recommendation: implement a modular playbook in which reconciliation processes are linked to automatic replenishment through tokenized events. Data is stored across distributed nodes; immutable records ensure traceability and make accountability straightforward. Each token has a purpose: batch identifiers, item-level serial numbers, location details, energy metrics, latency indicators, and full life cycle details captured in real time. Leading organizations would pilot with Everledger, Walmart, and Airbus; financing flows tied to inventory status improve working capital cycles. The golden rule remains inter-organizational visibility with privacy preserved: people in different roles access a secure, auditable ledger through role-based provisioning. Greater transparency increases stakeholder trust. Bogucharskov data streams supply item details, and shipments are tracked from supplier through recipient to the finished-goods stage. Security measures reduce vulnerability, and the approach scales to provider-level ecosystems with automation, monitoring, and financing integration. The architecture lets partners integrate ERP, WMS, and financing modules.

Playbook architecture

The architecture has three component layers: a reconciliation module, an automatic replenishment engine, and an audit and reporting layer. Tokens represent the fingerprint of each item, and each token's purpose is defined. Tokens are stored in immutable databases on separate nodes, and secure APIs support integration between organizations. The model handles cross-organization workflows with a latency target of about 200 ms; latency above 250 ms triggers fallback paths. A golden record is kept for every shipment, and Bogucharskov data streams feed in item details. Greater transparency reduces risk for Walmart, Everledger, and Airbus, and financing metrics tied to real-time inventory levels improve cash flow. Each module has clearly defined ownership by provider teams, so people from logistics, finance, and quality participate without duplicate records. Templated contracts make onboarding easy and reduce custom effort. Components comply with privacy rules, security protocols protect sensitive payloads, and vulnerability scans run before every dispatch event. The system integrates ERP, WMS, and financing modules.
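The interplay between reconciliation, replenishment, and the 250 ms fallback threshold can be sketched as plain Python rather than actual smart contract code. The reorder-point rule, the field names, and the action labels below are assumptions made for illustration; only the 250 ms threshold comes from the text.

```python
from dataclasses import dataclass

FALLBACK_LATENCY_MS = 250  # threshold from the text: above this, use the fallback path


@dataclass
class StockPosition:
    sku: str
    ledger_qty: int     # quantity implied by ledger tokens
    counted_qty: int    # quantity reported by the warehouse count
    reorder_point: int  # assumed replenishment trigger level
    target_level: int   # assumed level to replenish up to


def reconcile(position: StockPosition) -> int:
    """Return the discrepancy between the physical count and the ledger."""
    return position.counted_qty - position.ledger_qty


def replenishment_decision(position: StockPosition, latency_ms: float) -> dict:
    """Decide what to do for one SKU, given the observed ledger latency."""
    if latency_ms > FALLBACK_LATENCY_MS:
        # Ledger too slow to trust for automation: route to the fallback path.
        return {"sku": position.sku, "action": "fallback", "quantity": 0}
    if reconcile(position) != 0:
        # Never reorder on top of an unexplained discrepancy.
        return {"sku": position.sku, "action": "hold_for_reconciliation", "quantity": 0}
    if position.counted_qty <= position.reorder_point:
        quantity = position.target_level - position.counted_qty
        return {"sku": position.sku, "action": "reorder", "quantity": quantity}
    return {"sku": position.sku, "action": "none", "quantity": 0}
```

Checking latency first and discrepancies second means the replenishment engine only acts automatically when the ledger is both responsive and in agreement with the physical count.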

Implementation guidelines

Implementation steps: run a pilot within a mid-sized supplier network; map current workflows; define the token taxonomy; configure reconciliation rules; connect ERP, WMS, and finance modules; implement role-based access; run latency tests; and publish performance dashboards. Prioritize resilience; encrypt sensitive data; keep golden samples for reference; track energy consumption; align financial cycles; validate with real deliveries; monitor risks; and define contingency procedures. Document cross-functional collaboration. The Bogucharskov case studies offer lessons, simple onboarding guides support people, and partnerships with Everledger, Walmart, and Airbus provide validation.

ERP and Legacy System Integration: APIs, Middleware, and Data Mapping in Practice

Recommendation: adopt an API-first strategy, implement middleware orchestration, and map data into a canonical model. This improves traceability for practitioners across countries, enables on-demand data transfer, reduces errors, and sharpens visibility. Align the approach with the context of distribution networks: it supports sales of electronics and other products across the same models, covering the full life cycle from warehouse to distribution park, with sensors reporting the shipping status of goods in transit. This framework forms the foundation for cross-border collaboration. Dutta and Parssinen highlight practical themes for migration, and sound governance strengthens risk control.

Data architecture and mapping

- Canonical data model: define fields such as product_id, product_name, category, quantity, unit_of_measure, location, lot_or_serial, status, and timestamp; map legacy schemas to the ERP schema with mapping dictionaries; record both the source and target models; ensure traceability for downstream analytics.

- Legacy-to-ERP data mapping: create rules for field name translation, type normalization, and reference data harmonization; preserve original values for audit trails; store mapping results in a dedicated database; maintain data lineage.

- Master data management: create golden records for products, locations, and suppliers; deduplicate across countries; support the same taxonomy in different contexts; implement version control to account for new SKUs; enforce data quality checks.

- Sensors and telemetry: integrate warehouse sensors and shipment tracking; push status to the ERP in near real time; translate sensor signals into shipping states such as delivered, loaded, and dispatched; handle data latency to prevent inconsistent inventory counts.

- Security and access: enforce role-based permissions; encrypt sensitive fields; implement token-based authentication; align with regulatory constraints in the relevant markets.
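The canonical model and mapping dictionary described in the list above could look like the following sketch. The canonical field names come from the text; the legacy column names (`ITEM_NO`, `DESCR`, and so on) are invented for illustration, and the normalization rules are assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Tuple


@dataclass(frozen=True)
class CanonicalItem:
    product_id: str
    product_name: str
    category: str
    quantity: float
    unit_of_measure: str
    location: str
    lot_or_serial: str
    status: str
    timestamp: datetime


# Hypothetical legacy column names mapped to the canonical fields.
LEGACY_TO_CANONICAL = {
    "ITEM_NO": "product_id",
    "DESCR": "product_name",
    "CAT": "category",
    "QTY": "quantity",
    "UOM": "unit_of_measure",
    "WHSE": "location",
    "LOT": "lot_or_serial",
    "STAT": "status",
    "UPDATED": "timestamp",
}


def map_legacy_row(row: dict) -> Tuple[CanonicalItem, dict]:
    """Translate one legacy row; return the canonical item and the untouched original."""
    renamed = {LEGACY_TO_CANONICAL[k]: v for k, v in row.items() if k in LEGACY_TO_CANONICAL}
    missing = set(LEGACY_TO_CANONICAL.values()) - set(renamed)
    if missing:
        raise ValueError(f"missing canonical fields: {sorted(missing)}")
    # Type normalization (assumed rules): numeric quantity, upper-case unit, UTC timestamp.
    renamed["quantity"] = float(renamed["quantity"])
    renamed["unit_of_measure"] = str(renamed["unit_of_measure"]).strip().upper()
    renamed["timestamp"] = datetime.fromisoformat(renamed["timestamp"]).astimezone(timezone.utc)
    return CanonicalItem(**renamed), dict(row)  # original values kept for the audit trail
```

Returning the original row alongside the canonical item keeps the audit trail intact. Timestamps are expected to carry a UTC offset; a naive timestamp would be interpreted as local time by `astimezone`.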

Operations playbook

- API contracts: REST or GraphQL; OpenAPI documentation; strict versioning; test suites; contract testing to prevent breaking changes, which reduces disruption to downstream systems.

- Middleware orchestration: choose between a central orchestrator and choreography patterns; design idempotent endpoints; use messaging for reliability; track message state in persistent storage.

- Rollout plan: phased introduction at pilot sites; maturity tracking; scaling across distribution sites; allocation of budget and resources; tracking of incurred costs and return on investment.

- Metrics and reporting: define key performance indicators (KPIs) such as data latency, error rate, cycle time, and data completeness; provide on-demand dashboards; produce practical insights for product managers and logistics teams.

- Partnerships and context: establish cooperation with logistics vendors and business units; set up a governance forum; share requirements framed by the overall distribution flow; ensure alignment across countries.
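The middleware item in the list above asks for idempotent endpoints and message state tracked in persistent storage. A minimal single-process sketch using Python's built-in `sqlite3` module is shown here; the table layout and the `handle_once` helper are assumptions, and a production middleware would also need locking or a transactional outbox to handle concurrent consumers.

```python
import sqlite3
from typing import Callable


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the message-state store."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS messages ("
        "message_id TEXT PRIMARY KEY, status TEXT NOT NULL, result TEXT)"
    )
    return conn


def handle_once(conn: sqlite3.Connection, message_id: str, payload: dict,
                handler: Callable[[dict], str]) -> str:
    """Process a message at most once; redeliveries return the stored result."""
    row = conn.execute(
        "SELECT status, result FROM messages WHERE message_id = ?", (message_id,)
    ).fetchone()
    if row is not None and row[0] == "done":
        return row[1]  # duplicate delivery: skip the side effect
    result = handler(payload)
    with conn:  # commit the state change atomically
        conn.execute(
            "INSERT OR REPLACE INTO messages (message_id, status, result) "
            "VALUES (?, 'done', ?)",
            (message_id, result),
        )
    return result
```

With this pattern, a broker that redelivers the same `message_id` after a timeout does not cause a second stock movement in the ERP, because the stored result is returned instead of running the handler again.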

Scaling Blockchain for Global Logistics: Throughput, Latency, and Off-Chain Solutions

Recommendation: implement a three-layer architecture with a main ledger state, off-ledger channels, and periodic checkpointing to a root. Choose Hyperledger Fabric or Hyperledger Besu and configure it to your requirements. Enable auditing across primary routes and align with regulatory standards. Start with Toyoda pilot projects together with manufacturing partners, using initial material flows to verify feasibility; combine data from vehicles, suppliers, and carriers; and proceed at a steady pace.

Rationale: to scale for global customs operations, move beyond single-node validation. Use the combined throughput of off-ledger channels, apply state channels for batch settlement, and rely on sidechains for experimentation without impact on the main ledger. Latency drops below 2 seconds within a region, and cross-border settlement completes in 10–20 seconds with aggregated proofs. Improve data quality for provenance, implement a secure data model that meets regulatory requirements, adopt standardized digital twins for material flow, and apply propositions for auditing claims. Speed up adoption in sectors such as manufacturing, healthcare, and transportation; well-defined governance supports trust.

Implementation milestones

| Layer | Role | Target throughput (TPS) | Target latency (s) | Off-ledger mechanism | Security |
|---|---|---|---|---|---|
| Main ledger state | Authoritative record | 1,000–3,000 | 0.5–2 | Not specified | Audit-ready cryptographic hashes |
| Off-ledger channels | Fast updates | 3,000–10,000 | 0.1–0.5 | State channels, batch settlement | Anchored to the main state, periodic notarization |
| Root checkpoint | Trusted root across domains | 500–2,000 | 1–3 | Root commits | Strong PKI, asset-anchored proofs |
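The off-ledger channel row can be illustrated with a toy batch settlement: many small updates are collected off-ledger and only their net effect is written to the main ledger. This is a simplified sketch, not Hyperledger Fabric or Besu code; the `OffLedgerChannel` class, the fixed batch size, and the use of integer amounts are assumptions.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Tuple


class OffLedgerChannel:
    """Collects transfers off-ledger and settles net positions in batches."""

    def __init__(self, batch_size: int) -> None:
        self.batch_size = batch_size
        self.pending: List[Tuple[str, str, int]] = []
        self.settled: List[Dict[str, int]] = []  # stands in for the main ledger

    def record(self, payer: str, payee: str, amount: int) -> Optional[Dict[str, int]]:
        """Record one transfer; settle automatically when the batch is full."""
        if amount <= 0:
            raise ValueError("amount must be positive")
        self.pending.append((payer, payee, amount))
        if len(self.pending) >= self.batch_size:
            return self.settle()
        return None

    def settle(self) -> Dict[str, int]:
        """Net all pending transfers and commit one entry to the main ledger."""
        net: Dict[str, int] = defaultdict(int)
        for payer, payee, amount in self.pending:
            net[payer] -= amount
            net[payee] += amount
        batch = {party: value for party, value in net.items() if value != 0}
        self.settled.append(batch)
        self.pending.clear()
        return batch
```

Because each batch produces a single main-ledger write, the channel can absorb a much higher update rate than the main ledger, which is the throughput gap the table describes.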

Key takeaways

Brief notes: the goal is a gold standard for trust flows. Scalable software choices include Hyperledger implementations, and first steps involve Toyoda working with manufacturing divisions. Regulatory alignment remains essential. The healthcare sector benefits from personalized material provenance, supported by agreements between carriers and manufacturers. The paths explored lead to faster time to market, sources document progress, and the pace matches regulatory cycles. Kennedy-backed initiatives address challenges in regulation, privacy, and cross-border friction. Golden records support auditability, and working relationships with partners speed up deployment.

Privacy, Security, and Compliance in Cross-Border Supply Chain Transactions

Apply risk-based privacy safeguards by default: deploy end-to-end encryption and robust authentication, and enforce strict audit controls. This posture yields the largest gain in trust; partners include carriers, customs authorities, and suppliers operating under common rules.

Beneath the surface, traceability mechanisms provide data provenance details across borders. Privacy by design, data minimization, and purpose limitation follow approved architectures, ensuring fair data handling while allowing growth; teams analyze risk signals.

Anomaly detection depends on continuous monitoring of collected telemetry, and audit trails create a thorough operational record. Here risk signals become actionable, reliable containment becomes possible, and greater resilience follows.
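As one possible form of continuous telemetry monitoring, the sketch below flags readings that deviate strongly from a rolling window of recent values. The z-score rule, the window size, and the threshold are assumptions chosen for illustration; the source does not prescribe a detection method.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flags telemetry values far from the recent rolling mean."""

    def __init__(self, window: int = 20, threshold: float = 3.0, min_samples: int = 5) -> None:
        self.values = deque(maxlen=window)  # recent accepted readings
        self.threshold = threshold          # z-score above which a value is anomalous
        self.min_samples = min_samples      # readings needed before judging

    def observe(self, value: float) -> bool:
        """Return True if the value is anomalous; normal values extend the window."""
        is_anomaly = False
        if len(self.values) >= self.min_samples:
            mu = mean(self.values)
            sigma = pstdev(self.values)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        if not is_anomaly:
            self.values.append(value)  # anomalies are kept out of the baseline
        return is_anomaly
```

Each flagged reading can be written to the audit trail together with the window statistics, so that reviewers can see why a containment step was triggered.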

Operational safeguards

Research references: Pankowska; Juma; a paper from nanosci addresses energy; efficient cryptography; interchain architectures enable better policy enforcement and unmatched protection; sound recommendations are emerging; yong researchers provide context and insights.

Governance and compliance context

The authority sets baseline requirements. Implementation details cover scope, training, and workflow changes, and the authority issues approvals. As talent development progresses, risk models mature, which could reduce leakage; appropriate controls stay in place to preserve privacy.

Blockchain in Supply Chain Operations – Applications, Challenges, and Research Opportunities
