Start with a practical setup audit: map every device, its data, and who touches it. The audit exposes complexity that has accumulated across several systems and helps you decide what to change now. Once you know whether your devices include phones, sensors, or machines, you can plan capabilities and security with confidence.

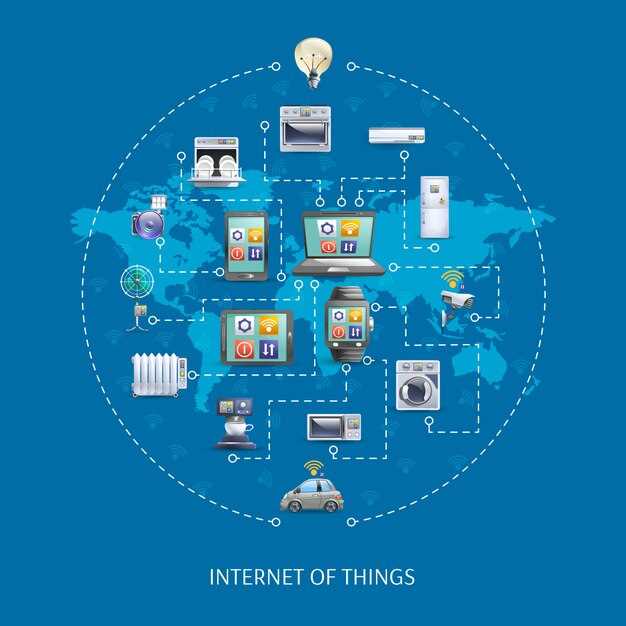

IoT did not spring up yesterday. The seeds were planted with simple sensors and remote meters in the 1980s. Wireless networks and cloud services then added connectivity. The term Internet of Things gained traction in the late 1990s, and since then the addition of sensors and actuators across factories, homes, and wearables has created a multilayered ecosystem with several levels of complexity. This evolution brought in devices like phones alongside dedicated sensors, pushing vendors to offer solutions for both standalone devices and broader deployments.

Build resilience with a multilayered security plan across device, network, and cloud levels. A practical minimal setup includes secure boot, signed updates, and two-factor authentication for admin access. You can't rely on firmware alone; combine automatic vulnerability scanning with strict access controls, encrypted data in transit, and segmented networks to limit the blast radius. The plan requires ongoing updates and a readiness to adapt as threats evolve.

Looking forward, establish repeatable onboarding for new devices by using standard interfaces and verified update paths. Choose scalable architectures with modular, interoperable solutions, and set a clear timeline for adding devices across offices or facilities. Track metrics such as time-to-onboard, mean time to patch, and data latency to justify investments, and plan so that parallel teams can add new phones or sensors without disruption. If you're unsure, start now with a small, focused pilot.

From early applications to integrated ecosystems: tracing the IoT evolution

Begin with a concrete recommendation: inventory every device and record its role as a node, then select a single architectural naming scheme and standard data definitions to guide integration.

Analogy helps link earlier deployments to today’s ecosystems. In the 2000s, simple sensors in appliances or wearable devices gathered data that moved to a hub via a gateway, forming an early architecture that rewarded modularity and local processing.

The power of that architecture comes from the ability to extend device life through over-the-air updates and to open the door to new services without disruptive rewrites.

As ecosystems matured, platforms offered specific interfaces, creating exclusivity but also driving interoperability when supported by open standards. Above all, stakeholders tended to favor scalable architectures that connect vehicles, wearable sensors, and industrial devices through common protocols.

Definitions shifted as networks moved from isolated machines to coordinated, distributed systems. Earlier models emphasized raw data collection, while modern stacks emphasize edge computing, secure communication, and interpretation of signals across devices.

Optimistic forecasts point to tighter privacy, faster response times, and better power budgets at the edge. Organizations could pilot cross-domain use cases (healthcare, manufacturing, and mobility) using a shared ontology to reduce fragmentation, a step toward more coherent ecosystems.

The story hinges on a clear interpretation of roles: devices become capable agents, platforms provide access points, and developers can reuse components across life cycles rather than rebuild from scratch. The message from industry leaders who push for interoperability is that a credible architecture reduces lock-in while supporting innovation.

In practice, teams should map governance: who owns data, how devices are updated, and how privacy is protected; this approach centers on a practical definition of processes and a minimal viable ecosystem that can scale across sectors.

With a forward-looking view, the IoT path moves from isolated devices to integrated ecosystems that coordinate through common standards, enabling new value streams at a lower cost per node.

How did early M2M connections function with limited bandwidth and power?

Report only meaningful changes: transmit when a value crosses a small delta threshold, pack data into compact binary frames, and store data locally while the channel is down. This care for energy and battery life yields fewer, more valuable messages traveling across limited bandwidth.
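The report-by-exception idea above can be sketched in a few lines. This is a minimal illustration, not a reconstruction of any particular device firmware: the class name `DeltaReporter`, the `send` callback, and the buffer size are all assumptions made for the example.

```python
from collections import deque


class DeltaReporter:
    """Report a reading only when it moves by at least `delta` since the last report."""

    def __init__(self, delta, send, max_buffer=32):
        self.delta = delta
        self.send = send                 # hypothetical callable(value) -> True on success
        self.last_reported = None
        # Bounded local store: the oldest entries are dropped first when it fills up.
        self.backlog = deque(maxlen=max_buffer)

    def offer(self, value):
        """Queue the value if it is a meaningful change, then try to deliver."""
        if self.last_reported is None or abs(value - self.last_reported) >= self.delta:
            self.backlog.append(value)
            self.last_reported = value
        self.flush()

    def flush(self):
        """Deliver buffered readings in order; stop at the first failed send."""
        while self.backlog:
            if not self.send(self.backlog[0]):
                return                   # channel is down, keep the data for later
            self.backlog.popleft()
```

Readings that stay within the threshold never reach the radio, and anything queued during an outage goes out in order on the next successful `flush`.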

These design choices reflect the constraints of early networks. A single meter or sensor often had limited link availability, sharing a scarce air interface such as SMS or a narrow RF channel. Weak signal, power limits, and tight duty cycles forced engineers to keep operations simple, reliable, and predictable, so that data could still be delivered over a sparse, intermittent connection. Local buffering and retry logic allowed networked devices to remain useful without constant contact.

Data payloads stayed small: 40–160 bytes per message were common, with 0–4 readings per transmission and a basic CRC for error detection. Binary encoding replaced ASCII to shrink size; delta encoding cut repetition in time series. Each message included a timestamp, a single device identifier, and a simple level indicator. For a networked device, reliability mattered more than latency; a batch that arrives once every few minutes often suffices for meter reading or status checks, which keeps activity minimal and predictable.
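To make the frame layout concrete, here is a small sketch of a binary message with a timestamp, device identifier, level indicator, delta-encoded readings, and a CRC. The field widths, byte order, and the choice of CRC-16 via `binascii.crc_hqx` are assumptions for illustration; real deployments each defined their own layout.

```python
import binascii
import struct

# Assumed header layout: uint32 timestamp, uint16 device id, uint8 level, uint8 count.
HEADER = struct.Struct("<IHBB")


def encode_frame(timestamp, device_id, level, readings):
    """Pack integer readings as one absolute int16 plus int16 deltas, then append a CRC-16."""
    deltas = []
    if readings:
        deltas = [readings[0]] + [b - a for a, b in zip(readings, readings[1:])]
    body = HEADER.pack(timestamp, device_id, level, len(deltas))
    body += struct.pack(f"<{len(deltas)}h", *deltas)
    return body + struct.pack("<H", binascii.crc_hqx(body, 0xFFFF))


def decode_frame(frame):
    """Verify the CRC, then rebuild absolute readings from the deltas."""
    body = frame[:-2]
    (crc,) = struct.unpack("<H", frame[-2:])
    if binascii.crc_hqx(body, 0xFFFF) != crc:
        raise ValueError("CRC mismatch")
    timestamp, device_id, level, count = HEADER.unpack_from(body)
    deltas = struct.unpack_from(f"<{count}h", body, HEADER.size)
    readings = []
    for d in deltas:
        readings.append(d if not readings else readings[-1] + d)
    return timestamp, device_id, level, readings
```

With this layout, a frame carrying four readings occupies 18 bytes (8 header, 8 data, 2 CRC), whereas the same content as delimited ASCII text would be several times larger.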

Power saving relied on duty cycling: radios slept between bursts and microcontrollers paused between tasks, waking only for transmissions. In many deployments, a battery pack of a few ampere-hours could last multiple years, which implies a long-run average draw of tens to a few hundred microamps (a 1 mA average would drain a 3 Ah pack in about four months). Currents of roughly 1–5 mA are therefore more plausible for a household meter's short active periods than for its hourly average, and the actual figure depended on radio technology, duty cycle, and message size. Where long outages were expected, devices used simple mains or solar backup. These patterns became the baseline from which modern LPWANs evolved.
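The duty-cycle arithmetic is easy to check by hand. The sketch below assumes an ideal battery (no self-discharge, no temperature effects) and uses hypothetical current figures chosen only to show the calculation.

```python
HOURS_PER_YEAR = 8760


def average_current_ma(active_ma, active_s, sleep_ma, period_s):
    """Time-weighted average current over one wake/sleep cycle."""
    sleep_s = period_s - active_s
    return (active_ma * active_s + sleep_ma * sleep_s) / period_s


def battery_life_years(capacity_mah, avg_ma):
    """Ideal-case lifetime: capacity divided by average draw."""
    return capacity_mah / avg_ma / HOURS_PER_YEAR


# Hypothetical device: 40 mA for 2 s once per hour, 0.02 mA while asleep, 3 Ah pack.
avg = average_current_ma(active_ma=40.0, active_s=2.0, sleep_ma=0.02, period_s=3600.0)
years = battery_life_years(capacity_mah=3000.0, avg_ma=avg)
```

The point of the calculation is that the sleep current and the length of the active burst dominate the result; the peak transmit current matters far less when the radio is on for only a few seconds per hour.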

Early networks favored simple protocols: a single channel, small frames, and minimal handshakes. The growing need for integrated management led to status reporting with a single message per event. A sound approach used by many vendors included ack/nack and retry within a fixed window; if a message failed, a later attempt would occur when signal conditions improved. This strategy kept devices connected but not overburdened, protecting battery life while still supporting data-driven operations.
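A bounded ack/nack retry loop might look like the sketch below. The doubling delay between attempts is an assumption added for illustration (the text only says retries happened within a fixed window), and `send` stands in for whatever link-layer call returns the acknowledgement.

```python
import time


def send_with_retry(send, payload, max_attempts=4, base_delay_s=1.0, sleep=time.sleep):
    """Return True once `send` reports an ACK, False if every attempt is NACKed."""
    for attempt in range(max_attempts):
        if send(payload):
            return True
        if attempt < max_attempts - 1:
            # Wait longer after each failure so a weak link has time to recover.
            sleep(base_delay_s * 2 ** attempt)
    return False
```

Capping the number of attempts is what protects the battery: after the window closes, the device gives up, keeps the data buffered, and goes back to sleep until the next scheduled wakeup. Passing `sleep` as a parameter also makes the loop testable without real delays.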

In a broader sense, these constraints produced devices that became embedded in daily life with little human oversight. They were provisioned with minimal setup and integrated into larger, supported systems, often with the ability to be reconfigured remotely in a safe, offline-first manner. For personal or commercial uses, that approach reduced maintenance overhead while ensuring critical data reached the control center under challenging conditions.

The practical takeaways for practitioners today: adopt a data-driven mindset, keep messages small, align transmission frequency with the application's battery-life requirements, and test under adverse conditions such as motion or interference. The evolutionary arc shows how single, simple transmissions become part of an integrated, resilient architecture that runs on minimal energy. The question remains: which combination of threshold, encoding, and sleep strategy best fits your use case? The answer lies in balancing reliability, latency, and power within the given constraints.

What standards and protocols enable cross-vendor interoperability?

Adopt Matter as the anchor for cross-vendor interoperability and pair it with MQTT for data streams and CoAP for constrained devices. Use TLS for secure onboarding and mutual authentication to reduce cost and risk. With gateways and standardized device profiles, you enable automated, plug‑and‑play setups that cut manual configuration. This prevents a breed of devices that speak only their own dialect and keeps the market moving.

Key standards and protocols enable interoperability: Matter defines a universal application layer for IP devices; Thread provides a low-power IPv6 mesh; MQTT and CoAP handle data transport for diverse ecosystems. Under budget pressure and with limited hardware, teams rely on lightweight implementations and robust certification to avoid fragmentation. Zigbee and Z-Wave devices can bridge to Matter through gateways, while TLS secures enrollment and over-the-air firmware updates.

Early deployments benefited from automated enrollment, standardized device profiles, and a focus on the conditions for reliable operation. Beyond security, these solutions simplify management by keeping configurations under a common framework, which answers questions about lifecycle, support, and update cadence. Gateways turn disparate stacks into a coherent interconnected system, and Matter-certified devices can join a shared ecosystem without manual tweaks.
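The gateway's job of turning disparate stacks into one common profile can be illustrated with a small normalization layer. Everything here is hypothetical: the two vendor payload shapes, the `Reading` profile, and the adapter names are invented for the example and do not correspond to Matter, Zigbee, or any real device schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Reading:
    """Hypothetical common profile that every adapter must produce."""
    device_id: str
    kind: str
    value: float
    unit: str


def from_vendor_a(msg):
    # Hypothetical vendor A payload: {"id": "...", "temp_f": 71.6}
    celsius = round((msg["temp_f"] - 32) * 5 / 9, 2)
    return Reading(msg["id"], "temperature", celsius, "C")


def from_vendor_b(msg):
    # Hypothetical vendor B payload: {"dev": "...", "t_c_x10": 220} (tenths of a degree)
    return Reading(msg["dev"], "temperature", msg["t_c_x10"] / 10, "C")


ADAPTERS = {"vendor_a": from_vendor_a, "vendor_b": from_vendor_b}


def normalize(vendor, msg):
    """Translate a vendor-specific payload into the common profile."""
    try:
        adapter = ADAPTERS[vendor]
    except KeyError:
        raise ValueError(f"no adapter registered for {vendor!r}") from None
    return adapter(msg)
```

Downstream services only ever see `Reading` objects, so supporting a new vendor means registering one adapter rather than touching every consumer.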

Management standards like OMA LwM2M and IPSO-guided profiles provide consistent device management, provisioning, and telemetry. While some deployments stay cloud-centric, many rely on edge processing and automated OTA updates to reduce failures.

Customer outcomes improve when standards unite a broad market of compatible products, lowering cost and expanding choices. Companies that invest in open governance, regular interoperability testing, and predictable update cadences reduce failures and drive faster adoption. By treating gateways as logical bridges rather than permanent adapters, teams can scale solutions across environments over time while keeping safety and reliability.

Where should data be processed: edge, fog, or cloud in real deployments?

Process latency-critical data at the edge; offload broader aggregation to fog; reserve cloud for training and governance.

Edge computing enables smarter buildings, automotive systems, and environments by keeping data close to the source. Edge devices run on embedded processors, operate within tight power budgets, and rely on secure enclaves to protect sensitive data. This proximity yields sub-20 ms responses for lights, access controls, and sensor fusion, while reducing network traffic and preserving offline capabilities when connectivity is limited. The short path from sensor to action is what makes edge decisions so effective, and for customer-facing apps on phones and onsite terminals, edge decisions deliver immediate feedback and a consistent user view.

Fog sits between edge and cloud, adding a regional layer that aggregates data from multiple endpoints. It provides a local view of operations across a campus, fleet yard, or city block, enabling pre-processing, privacy-preserving analytics, and policy enforcement that scales beyond a single device. By keeping data closer to the edge, fog reduces cloud egress by a meaningful margin and maintains low-latency coordination for multi-device environments. If latency or data sovereignty is a concern, that's a prime use case for fog, because it can update models and distribute them rapidly across devices without centralizing everything in the cloud. This three-tier approach remains valid across industries.
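As a rough illustration of fog-level pre-processing, the sketch below collapses a window of raw samples from several endpoints into one summary per device before anything is forwarded upstream. The function name and the summary fields are assumptions for the example.

```python
from collections import defaultdict
from statistics import mean


def summarize_window(samples):
    """Reduce raw (device_id, value) samples to one summary record per device."""
    by_device = defaultdict(list)
    for device_id, value in samples:
        by_device[device_id].append(value)
    return {
        device_id: {
            "count": len(values),
            "min": min(values),
            "mean": mean(values),
            "max": max(values),
        }
        for device_id, values in by_device.items()
    }
```

Forwarding one summary per device per window, instead of every raw sample, is one simple way a fog node cuts cloud egress while keeping the raw data local.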

Cloud handles long-term storage, heavy analytics, and cross-system governance. It powers insights from aggregated data, trains models with diverse inputs from thousands of devices, and provides centralized security and auditability. The cloud view enables strategic planning, automotive fleet optimization, and enterprise-wide reporting, while adding scalability that would be impractical at the edge or fog alone. This balance also supports evolving technology and changing business models. For customer-facing services, the cloud delivers a single, reliable view of operations across locations. Because data volumes grow over time, cloud remains the best place for archival, historical benchmarking, and global coordination, especially when you need to compare regions, run simulations, or share insights with customers through dashboards. Going cloud-first for latency-tolerant workloads makes sense, but it works best when edge and fog handle the real-time workload.
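The three-tier split described in this section can be expressed as a simple placement rule. This is only a sketch: the 20 ms edge cutoff echoes the figure quoted for edge responses, while the 500 ms fog cutoff and the `needs_global_view` flag are hypothetical values chosen for illustration.

```python
EDGE_MAX_MS = 20    # based on the sub-20 ms figure quoted for edge responses
FOG_MAX_MS = 500    # hypothetical cutoff for regional coordination


def choose_tier(latency_budget_ms, needs_global_view=False):
    """Pick where a workload runs from its latency budget and data scope."""
    if latency_budget_ms < EDGE_MAX_MS:
        return "edge"       # real-time control stays next to the sensor
    if needs_global_view:
        return "cloud"      # cross-site training, archival, governance
    if latency_budget_ms <= FOG_MAX_MS:
        return "fog"        # regional aggregation and coordination
    return "cloud"
```

In practice the decision also depends on data sovereignty, cost, and connectivity, so a rule like this is a starting point for discussion rather than a complete policy.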

How did architects move from standalone apps to end-to-end IoT systems?

Adopt a platform-led strategy that unifies data, device behavior, and workflows from sensor to service, rather than patching one-off apps. This will mean fewer integration headaches and a single source of truth across edge, gateway, and cloud. Perhaps this approach also accelerates delivery by aligning teams around a common model.

Along the way, architects found that moving from a thing-centric view to end-to-end systems requires embedding compute at the edge and standardizing the underlying data streams. It doesn't require ripping out legacy apps overnight; you can migrate gradually with adapters, pilots, and incremental refactors. This creates a solid foundation for scalable analytics and privacy-ready controls that always respect user consent.

In a milk processing plant, embedded sensors monitor temperature, viscosity, and flow. Each device communicates over a reliable network, and the data is processed locally on the machine before aggregated metrics are sent to the cloud. This setup reduces latency, increases traceability, and supports compliant reporting across batches.

- Standardize a common data fabric across every thing, edge gateways, and cloud services, ensuring the underlying semantics are consistent and easy to reason about.

- Move intelligence to the edge and connect devices through a scalable backend, using lightweight protocols (MQTT/CoAP) and robust offline support to maintain operation when online connectivity is intermittent.

- Automate the lifecycle of devices and services, including provisioning, firmware over-the-air updates, telemetry, and safe rollback, to shorten deployment cycles without manual handoffs.

- Establish governance with a clear purview, data minimization, and role-based access to protect privacy while enabling legitimate analytics across processes and teams.

- Measure impact with concrete metrics for every layer: latency, reliability, energy usage, and data quality, and use that feedback to iterate across the stack.

- Organize multidisciplinary teams around shared standards to create a fast path from concept to delivery, and ensure clear ownership of both hardware and software components.
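The lifecycle step in the list above (over-the-air updates with safe rollback) can be sketched as follows. The device is modeled as a plain dictionary and `health_check` is a hypothetical callback; note that a SHA-256 digest check only detects corruption and is not a substitute for the cryptographic signature verification that signed updates require.

```python
import hashlib


def install_update(device, image, expected_sha256, health_check):
    """Install `image` only if its digest matches; roll back if the health check fails."""
    if hashlib.sha256(image).hexdigest() != expected_sha256:
        return "rejected"              # corrupt or altered image, nothing changes
    previous = device["firmware"]
    device["firmware"] = image
    if health_check(device):
        return "installed"
    device["firmware"] = previous      # restore the last known-good image
    return "rolled_back"
```

Keeping the previous image until the new one passes its health check is what makes the rollback safe; a device that overwrites its only copy of working firmware has nothing to fall back to.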

This shift delivers a transformative set of capabilities that combines embedded intelligence with automated workflows, supporting continuous operation and a consistent online/offline experience. By unifying device-level data into a seamless platform, architects enable greater resilience, privacy by design, and rapid adaptability to changing requirements across industries.

When did industrial IoT move from pilot projects to broad production use?

Scale IIoT now by moving from pilot projects to production-ready, integrated systems that connect sensors at the edge with cloud analytics and standardized interfaces. Create a single, shared data model and deploy user-friendly dashboards to shorten time to value across all sites.

In the 2010s, pilots proved their value on controlled lines; year after year, many sites replicated these setups. The turning point came around 2017–2019, when the addition of edge devices, more reliable internet connectivity, and cost-effective semiconductor components enabled large-scale production deployments. Telecom companies entered the scene with dedicated IIoT networks that helped plants connect back to the enterprise system. The dominant change was not just technology but an ecosystem that delivers shared data where operations take place, integrating sensors, routers, and analytics into a coherent view.

In plain terms, the shift is about greater data availability, faster decision-making, and easier collaboration across sites. Failures taught hard lessons, and those lessons refined how we design for reliability. Start with 3–5 high-value use cases, then scale; choose a platform that offers integrated analytics, robust APIs, and secure data exchange. Data governance should be defined under the purview of plant management, with clear ownership and access controls. This approach reduces risk and speeds user adoption while keeping costs predictable.

IIoT implementation across multiple sites benefits from concrete steps: standardizing interfaces, aligning data models, and training teams to interpret real-time alerts. A practical path combines back-office integration with shop-floor visibility, so operations teams see measurable improvements in uptime and output.

| Factor | Action | Impact |
|---|---|---|
| Edge-to-cloud integration | Adopt an integrated platform with sensors, gateways, and analytics; enforce standardized interfaces and a shared data model. | Faster time to value, consistent data across sites; simpler management. |
| Data governance | Define purview, data ownership, and security; set data-sharing policies. | Lower risk and greater confidence when sharing data with partners. |
| Connectivity | Use telecom operators or managed networks to scale reliable communication to all assets. | Higher availability; faster commissioning across plants. |
| Use-case strategy | Select 3–5 high-value use cases; iterate and expand based on success. | Better return on investment; fewer failed projects. |
| People and process | Train teams, align with operations, and set measurable KPIs (OEE, MTTR). | Sustainable adoption and clear justification. |
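The two KPIs named in the table have standard definitions that are simple to compute. The sketch below uses the usual OEE decomposition (availability × performance × quality) and the mean of repair durations for MTTR; the shift figures in the example are hypothetical.

```python
def oee(planned_s, run_s, ideal_cycle_s, total_units, good_units):
    """Overall Equipment Effectiveness = availability * performance * quality."""
    availability = run_s / planned_s                    # share of planned time actually running
    performance = ideal_cycle_s * total_units / run_s   # actual speed versus ideal speed
    quality = good_units / total_units                  # share of units without defects
    return availability * performance * quality


def mttr(repair_durations):
    """Mean Time To Repair: total repair time divided by the number of repairs."""
    return sum(repair_durations) / len(repair_durations)


# Hypothetical shift: 8 h planned, 7 h running, 30 s ideal cycle, 700 units, 665 good.
shift_oee = oee(planned_s=28800, run_s=25200, ideal_cycle_s=30, total_units=700, good_units=665)
repair_mttr_min = mttr([30, 45, 15])
```

Tracking the three OEE factors separately, rather than only the product, shows whether losses come from downtime, slow cycles, or scrap, which is what tells a team where real-time alerts are worth adding.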

IoT Is Not New – A Brief History of Connected Devices
