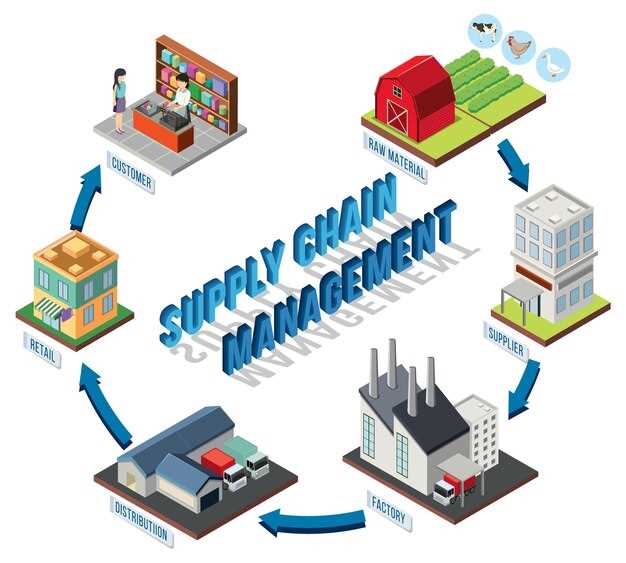

Start by implementing a traceable data layer across partners to preserve nutritional integrity, minimize spoilage, and ensure goods reach their destination reliably.

Integration supports real-time tracking from production to the consumer, in line with GFSI measures and industry standards.

Developers should focus on purpose-built data models, paying attention to origin and to the addition of verifiable records, so that nutrition claims can be trusted in real time. That capability translates into less fraud and greater trust across a formidable industry.

Governance frameworks should prioritize minimizing data exposure while maintaining audit trails, in line with GFSI, consumer expectations, and regulatory measures.

4 Discussion Topics on Blockchain-Driven Food Provenance and Operations

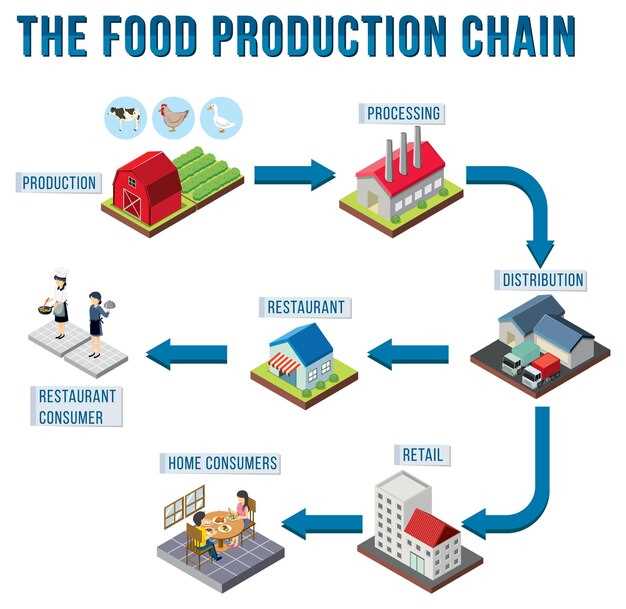

Topic 1: Build four levels of traceability using distributed ledger technology to capture origin, processing, packaging, and distribution milestones; this approach produces verified histories and supports issuing certificates at each stage. Creators and retailers gain confidence as Google-style queries return reliable data; previously confirmed records provide bank-grade integrity across chains, enabling seamless document generation and automatic checks for required compliance. Years of practice show its relevance across markets, and authors and creators must align on data standards to keep future benefits consistent.

Topic 2: Automate document flows to enable fast, verified integrity checks across Lego-like blocks of activity from farms to retailers; this cuts back-and-forth, speeds up quality control, and lowers error rates. Issuing a certificate can trigger automatic checks when the data meets defined rules. Cross-functional teams can tag changes with author and creator IDs, while Google-style indexing makes tracing fast.

Topic 3: Evaluate effectiveness by tracking verification time, error rates, and cycle time across chains of custody that span producers, packers, carriers, and retailers. Over the years, this data-driven approach yields steady reductions in waste and recalls. Planned metrics include cost per unit, return rates, and customer trust. Difficult contexts, such as differing regulatory regimes, call for interoperable records and standardized certificates.

Topic 4: Governance design involves authors and creators alongside retailers, auditors, and regulators; it sets identical access levels, responsibilities, and data retention policies so that the same standards hold in every jurisdiction. Bank-grade controls plus a conceptual risk framework support compliance across markets. Future work should align with industry groups and standards bodies; previously established standards keep the implementation relevant. Automated audit routines and modular, Lego-like workflows support compliance and scalability, while open channels help with rapid adaptation.

End-to-End Traceability with Blockchain and IoT

Recommendation: Implement a batch-level record schema linked to sensors, using a trusted ledger to capture every event from origin to retailer, so that data cannot be altered without detection, and keep calibration records for safety-related steps.

Start with a minimum viable architecture: attach RFID or QR tags to each pallet, deploy temperature and humidity probes, use GPS to track movement, and send the data to edge gateways that run initial checks before the readings are faithfully compiled into ledger entries.
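The initial checks at an edge gateway could look roughly like the sketch below. The `Reading` fields, the plausibility ranges, and the `gateway_check` function are illustrative assumptions, not part of any specific gateway product.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Reading:
    """One sensor sample for a pallet (hypothetical schema)."""
    pallet_id: str        # taken from the RFID or QR tag
    temperature_c: float
    humidity_pct: float
    lat: float
    lon: float
    timestamp: float      # seconds since the epoch


def gateway_check(reading: Reading,
                  last_timestamp: Optional[float] = None) -> List[str]:
    """Return a list of problems; an empty list means the reading can be forwarded."""
    problems = []
    if not reading.pallet_id:
        problems.append("missing pallet id")
    # Assumed plausible sensor range; tune per device.
    if not -40.0 <= reading.temperature_c <= 60.0:
        problems.append("temperature outside plausible sensor range")
    if not 0.0 <= reading.humidity_pct <= 100.0:
        problems.append("humidity outside 0-100%")
    if not (-90.0 <= reading.lat <= 90.0 and -180.0 <= reading.lon <= 180.0):
        problems.append("invalid GPS coordinates")
    # Out-of-order timestamps often indicate clock drift or a replayed sample.
    if last_timestamp is not None and reading.timestamp <= last_timestamp:
        problems.append("timestamp not increasing")
    return problems
```

Rejecting malformed readings at the gateway keeps obviously bad data out of the ledger, where it would otherwise be permanent.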

Pilot results show that linking sensor data to certificate metadata reduces the risk of misrepresentation, improves recall readiness, and raises customer trust. In Covid-era analyses, immediate visibility reduced stock-loss incidents by 20 to 35% on the routes tested.

The degree of improvement depends on data quality; key factors include device calibration, time synchronization, and how often data is compiled. The reported results depend on append operations at the gateway consistently completing in 3–5 seconds, with tamper-evident seals on packaging events.

In practice, never rely on a single source; neither the supplier nor the facility should host all the records. Build a distributed set of collectors and verifiers that can run safety-related checks, such as temperature excursions, humidity, and structural integrity. This collection supports market-facing reporting, letting customers see lineage, risk signals, and compliance status.

Experience from cases where COVID-19 disrupted flows shows that eliminating data gaps and mislabeling saves time during a crisis. A well-structured linking system makes it possible to investigate quickly why a lot failed, enabling targeted corrections instead of blanket recalls.

Examples from networks in the Tian region show measurable benefits: a 40–50% reduction in mislabeling, faster response times, and a clearer experience for retailers, brokers, and consumers.

Data flow steps: capture > verify > compile > publish; each step adds a degree of assurance. If collected data lacks a verifiable origin, the risk of misrepresentation rises, so designers should build digital signatures and non-repudiation into event records. In practice, the creators of packaging labels should sign certificates to prevent impersonation.
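A minimal sketch of signed, hash-chained event records follows, assuming a hypothetical in-memory `Ledger` class. It uses HMAC with a shared key as a stand-in for digital signatures so that it runs on the standard library alone; HMAC does not give true non-repudiation, so a real deployment would need an asymmetric signature scheme instead.

```python
import hashlib
import hmac
import json

GENESIS = "0" * 64  # placeholder hash for the first entry


def sign(key: bytes, payload: dict) -> str:
    """Keyed digest over a deterministic encoding (stand-in for a signature)."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":")).encode()
    return hmac.new(key, body, hashlib.sha256).hexdigest()


def _digest(record: dict) -> str:
    return hashlib.sha256(json.dumps(record, sort_keys=True).encode()).hexdigest()


class Ledger:
    """Append-only list in which each entry commits to the previous one."""

    def __init__(self):
        self.entries = []

    def append(self, payload: dict, signer: str, key: bytes) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        record = {"payload": payload, "signer": signer,
                  "signature": sign(key, payload), "prev_hash": prev_hash}
        record["hash"] = _digest(record)  # computed before "hash" is added
        self.entries.append(record)
        return record

    def verify(self, keys: dict) -> bool:
        """Check chain links, entry hashes, and signatures in order."""
        prev_hash = GENESIS
        for rec in self.entries:
            body = {k: v for k, v in rec.items() if k != "hash"}
            if rec["prev_hash"] != prev_hash or rec["hash"] != _digest(body):
                return False
            key = keys.get(rec["signer"])
            if key is None or not hmac.compare_digest(
                    rec["signature"], sign(key, rec["payload"])):
                return False
            prev_hash = rec["hash"]
        return True
```

Because every entry stores the hash of its predecessor, editing any earlier payload invalidates both that entry's hash and its signature, so `verify` returns `False`.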

Metrics reported from pilots include response times 28–44% faster, 15–30% fewer losses from damage, and greater trust among customers.

This approach helps reframe risk management, eliminate data silos, and enable continuous improvement across all market segments.

Automating Recall Procedures with Smart Contracts

Implement automatic recall triggers through self-executing agreements that link lot IDs to current status events and transport updates; the contracts enforce hold orders across partner networks immediately.

Effective recall orchestration reduces the risk that an illness outbreak escalates.

In essence, a centralized but interoperable model speeds up containment, reducing the losses that mount when illness spreads and product passes through multiple nodes.

Evaluated results from multiple pilots confirm empirical gains: faster isolation, transparent sourcing, and reduced losses, with data integrity maintained.

Using nonce values prevents replay and supports auditability; data moves from the point of origin to distribution nodes with minimal friction.
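As a rough illustration of nonce-based replay prevention, the sketch below tracks which nonces each sender has already used. The `NonceRegistry` class and the sender names are hypothetical; a production system would also need to expire old nonces and persist the registry.

```python
import secrets


class NonceRegistry:
    """Rejects any (sender, nonce) pair that has been seen before."""

    def __init__(self):
        self._seen = set()

    def issue(self) -> str:
        # 16 random bytes, hex-encoded: unpredictable and practically unique.
        return secrets.token_hex(16)

    def accept(self, sender: str, nonce: str) -> bool:
        """Return True the first time a pair is seen, False on a replay."""
        key = (sender, nonce)
        if key in self._seen:
            return False
        self._seen.add(key)
        return True
```

A message captured in transit and resent later carries a nonce the registry has already recorded, so the second delivery is refused while the first remains in the audit trail.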

Data feeds from the ERP, quality labs, and transport sensors drive the decision points; strategies include recall triggers, hold notices, and supplier notifications.

Because it also covers suppliers, processors, and retailers, this approach provides visibility into current status and a dynamic assessment of risk.

Evaluation rests on characteristics such as traceability, response time, and containment accuracy. Evaluated results, the value delivered so far, and empirical findings guide ongoing tuning of the strategies.

| Step | Data Source | Trigger | Action | KPI |
|---|---|---|---|---|
| Ingestion | ERP, LIMS, WMS | status change | store event, compute nonce | latency, accuracy |
| Assessment | sensor feeds | illness signal | flag lot, notify parties | recall speed |
| Execution | contract ledger | assessed risk | place item on hold, alert sources | reduction in item loss |
| Post-recall | audit logs | completion | document traceability | compliance rate |
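The ingestion, assessment, and execution steps in the table can be sketched as a small state machine. This is plain Python rather than an actual smart contract, and the `RecallContract` class, the numeric `risk_score`, and the 0.8 threshold are assumptions made for illustration only.

```python
from dataclasses import dataclass, field


@dataclass
class Lot:
    lot_id: str
    holders: list = field(default_factory=list)  # partners currently handling the lot
    on_hold: bool = False


class RecallContract:
    """Places a lot on hold and notifies its holders once risk crosses a threshold."""

    def __init__(self, risk_threshold: float = 0.8):
        self.risk_threshold = risk_threshold  # assumed scale: 0.0 to 1.0
        self.lots = {}
        self.audit_log = []

    def register(self, lot_id: str, holders: list) -> None:
        self.lots[lot_id] = Lot(lot_id, list(holders))

    def ingest(self, lot_id: str, source: str, risk_score: float) -> list:
        """Ingestion + assessment: record the event, then act if risk is high."""
        self.audit_log.append(("ingested", lot_id, source, risk_score))
        if risk_score >= self.risk_threshold:
            return self._execute_hold(lot_id)
        return []

    def _execute_hold(self, lot_id: str) -> list:
        """Execution: idempotent hold order plus one notice per holder."""
        lot = self.lots[lot_id]
        if lot.on_hold:
            return []  # already held; do not notify twice
        lot.on_hold = True
        self.audit_log.append(("hold", lot_id, tuple(lot.holders)))
        return [f"HOLD {lot_id}: notify {holder}" for holder in lot.holders]
```

The audit log doubles as the post-recall record: every ingested event and every hold order is appended in order, which supports the compliance-rate KPI in the last row.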

Provenance Data Standards for Farm-to-Table Transparency

Adopt cross-domain provenance data standards that harmonize source attribution, event encoding, and auditable records across all stages, backed by a published policy that gives participants clarity.

Define a canonical language for event encoding that enables interoperability between databases and a website, with clearly documented schemas and a set of reference implementations.

Since data standards emerged, notable cases have demonstrated the value of publishing provenance events interoperably across farm, processing, and retail nodes. The main methodological guidance from Bouzdine-Chameeva and Treiblmaier informs practical rules for publishing provenance data, retention policy, and access control, which supports risk reduction and accountability.

The policy should specify a canonical language for event encoding, provide reference implementations in several languages, publish these artifacts on a central website, and define publication mechanisms that minimize interpretation gaps and data misalignment.
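One way to picture a canonical event encoding is a deterministic JSON serialization, sketched below. The function names and event fields are hypothetical, and this is a simplified stand-in rather than a complete canonicalization scheme (it does not, for example, normalize number formats).

```python
import hashlib
import json


def canonical_encode(event: dict) -> bytes:
    """Serialize with sorted keys and no optional whitespace, as UTF-8."""
    return json.dumps(
        event, sort_keys=True, separators=(",", ":"), ensure_ascii=False
    ).encode("utf-8")


def event_id(event: dict) -> str:
    """Content-derived identifier: equal events get equal ids on every node."""
    return hashlib.sha256(canonical_encode(event)).hexdigest()


# Two partners building the same event in a different key order
# still produce byte-identical encodings.
farm_view = {"lot": "L1", "step": "harvest", "site": "farm-3"}
retail_view = {"site": "farm-3", "step": "harvest", "lot": "L1"}
same_bytes = canonical_encode(farm_view) == canonical_encode(retail_view)
```

Agreeing on the byte-level encoding is what lets independent implementations in different languages compute the same hash for the same event.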

Finally, build intelligence-driven verification, error reduction, and robotic automation at points of origin to strengthen source validation and tamper resistance while supporting the audit trail.

Real-Time Cold Chain Monitoring on a Shared Ledger

Deploy IoT sensors on every leg and send the readings to a shared ledger with automatically generated proofs; set up smooth ingestion, timestamped events, and threshold alerts so teams can respond within minutes.

This setup addresses a central challenge: data integrity across multiple participants. Use a permissioned mechanism with role-based access, cryptographic signatures, and consensus checks to curb tampering and reduce vulnerability. Trusted participants sign their own heat-stress or cold-stress alerts, creating an auditable record that discourages fraud and protects reputation.

Estimated improvements include a 15–25% reduction in waste from temperature excursions, recalls that are 30–40% faster, and better outcomes for customers through verifiable provenance. Advances in sensor accuracy, data normalization, and partner interoperability reduce complexity as data flows from source to end user with minimal latency, which supports smooth decision-making. Clear dashboards turn the metrics into useful information.

Imagine a scenario in which a single excursion triggers automated hold and inspection workflows: if a sensor reports out-of-range conditions, the shipments are quarantined and routing is adjusted, downstream partners receive reliable alerts, and tamper-evident records provide proof that previously hidden vulnerabilities were addressed and the outcomes confirmed.
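The excursion scenario above might be sketched as follows. The 2–8 °C band, the 10-minute tolerance, and the `shipments_to_quarantine` function are assumptions chosen for illustration; real limits depend on the product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TempReading:
    shipment_id: str
    minute: int            # minutes since the start of the leg
    temperature_c: float


def shipments_to_quarantine(readings, low_c=2.0, high_c=8.0, max_minutes=10):
    """Return ids of shipments that stayed out of range for max_minutes or more."""
    run_start = {}         # shipment id -> minute the current excursion began
    quarantined = set()
    for r in sorted(readings, key=lambda x: (x.shipment_id, x.minute)):
        if low_c <= r.temperature_c <= high_c:
            run_start.pop(r.shipment_id, None)   # back in range: reset the run
            continue
        start = run_start.setdefault(r.shipment_id, r.minute)
        if r.minute - start >= max_minutes:
            quarantined.add(r.shipment_id)
    return quarantined
```

Requiring a sustained excursion rather than a single out-of-range sample is a design choice that avoids quarantining shipments over brief spikes, such as a door opening during loading.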

To get started, run a controlled pilot in selected corridors with 3 to 5 partners; define the data schema and a privacy-first sharing rule; choose a trusted vendor with a clear security posture; monitor flagged events and iterate on data quality. Focus on reducing vulnerabilities such as sensor calibration drift, device outages, and manual data entry by establishing rapid incident response and continuous improvement. This shifts attention from traditional checks to the issues that previously caused delays, and it brings measurable benefits.

Cross-Border Interoperability Between Suppliers and Retailers

Adopt cross-border data exchange with blockchain-gs1 to enable real-time visibility between distributors and retailers. This change brings broad transparency, reduces fragmented information silos, and encourages consistent collaboration among the parties involved, with real gains in speed and accuracy.

Defining common data elements with GS1 standards aligns identifiers, lot numbers, expiry dates, and location events across borders. Pair the data with event timestamps to support precise recalls and fast store-level responses. Efficient processing through streaming validation reduces latency.
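A small sketch of such a shared record is shown below. The check-digit routine follows the standard GS1 mod-10 calculation for GTINs; the `ItemEvent` class and its field names are hypothetical and do not reproduce any official GS1 or blockchain-gs1 schema.

```python
from dataclasses import dataclass
from datetime import date, datetime, timezone


def gtin_check_digit(body: str) -> int:
    """GS1 mod-10 check digit: weights 3,1,3,1... starting from the rightmost digit."""
    total = sum(int(d) * (3 if i % 2 == 0 else 1)
                for i, d in enumerate(reversed(body)))
    return (10 - total % 10) % 10


def is_valid_gtin(gtin: str) -> bool:
    return (gtin.isdigit() and len(gtin) in (8, 12, 13, 14)
            and gtin_check_digit(gtin[:-1]) == int(gtin[-1]))


@dataclass(frozen=True)
class ItemEvent:
    """Common data elements paired with an event timestamp."""
    gtin: str
    lot: str
    expiry: date
    location: str          # treated as an opaque location identifier here
    event_time: datetime

    def __post_init__(self):
        # Streaming-style validation: reject bad identifiers at creation time.
        if not is_valid_gtin(self.gtin):
            raise ValueError(f"invalid GTIN: {self.gtin!r}")
        if self.event_time.tzinfo is None:
            raise ValueError("event_time must be timezone-aware")


event = ItemEvent("4006381333931", "LOT-7", date(2030, 1, 31), "store-12",
                  datetime(2029, 12, 1, tzinfo=timezone.utc))
```

Requiring timezone-aware timestamps is an assumption added here because cross-border events would otherwise be ambiguous to order.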

Onboard distributors, retailers, carriers, and regulators as participants with role-based access to information, enabling trusted exchange without exposing confidential details.

The lack of interoperable data is addressed by establishing a shared data layer, which enables consistent queries, automated exception handling, and more reliable performance across markets. Closing this gap with a standards-based approach also supports scalability.

Protect confidential details through authorized access, encryption, and immutable records; evidence from pilots shows better incident response, traceability, and accountability. Lessons from field trials help tailor access models and data views to regional needs.

The emergence of cross-border standards reduces manual checks and speeds up onboarding of new partners. Implement this framework along a three-phase path: establish governance, pilot with selected partners, and scale up. Monitor key indicators such as cycle time, error rate, and cost per item to verify efficiency gains and justify wider use.

Evidence-based recommendations for policy alignment: adopt privacy-preserving data exchange, guarantee consumer-facing safeguards, and publish standardized KPIs. Lasting benefits require governance that aligns incentives. Operate sustainably across all corridors, backed by innovative and interoperable systems, to address long-term needs.

Each movement of an item is stored as a chain of events in blockchain-gs1, which lets store managers verify the origin and status of a product at any stage.

Data Privacy, Compliance, and Auditability in Blockchain Food Systems

Recommendation: Implement privacy-preserving data exchange with consent-based access, off-chain storage for confidential records, and immutable audit trails on a distributed ledger; these measures allow verification while keeping the data protected.

- Privacy architecture: adopt zero-knowledge proofs to validate attributes without exposing confidential details; pseudonymize identities; minimize the data collected; capture consent in tamper-evident records; provide dashboards with clear visuals of permissions, status, and related activity across days and situations. This approach increases reliability and protects consumers; studies show that risk falls when safety-related data stays protected during production and distribution.

- Compliance mapping: align with the GDPR, the CCPA, and sector-specific guidelines; define clear workflows for data subject rights; implement automated policy checks; keep evidence records that demonstrate compliance to regulators. Karen, from the compliance department, points to best practices in cross-border flows; current practice shows smoother audits under continuous monitoring.

- Auditability and verification: store immutable, tamper-evident records; generate automated reports for internal teams and external auditors; offer consumer-facing transparency portals that reveal verified attributes without exposing details. Conversely, failing to separate data increases risk, so emphasize secure interfaces and controlled data exchange. Production teams access the information they need through role-based views, while humans review flagged anomalies.

- People, processes, and risk management: assign data stewards across disciplines; provide training in privacy, security, and compliance; transform data handling in measured, auditable steps. Ongoing studies show better results when humans stay involved at decision points; currently, risk review cycles run alongside production controls. Karen's case highlights the importance of routine audits and clear escalation paths.
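Two of the privacy measures above, pseudonymized identities and off-chain storage with an on-chain commitment, can be sketched together. The `OffChainStore` class, the `pseudonymize` helper, and the list standing in for the ledger are illustrative assumptions; zero-knowledge proofs are out of scope for this sketch.

```python
import hashlib
import hmac
import json


def pseudonymize(identity: str, secret: bytes) -> str:
    """Keyed hash: stable per identity, not reversible without the secret."""
    return hmac.new(secret, identity.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


class OffChainStore:
    """Holds confidential records; only their SHA-256 digests go on the ledger."""

    def __init__(self):
        self._records = {}

    def put(self, record: dict) -> str:
        blob = json.dumps(record, sort_keys=True).encode("utf-8")
        digest = hashlib.sha256(blob).hexdigest()
        self._records[digest] = blob
        return digest      # this value is what would be written on-chain

    def get_verified(self, digest: str) -> dict:
        """Return the record only if it still matches its on-chain digest."""
        blob = self._records[digest]
        if hashlib.sha256(blob).hexdigest() != digest:
            raise ValueError("off-chain record does not match on-chain digest")
        return json.loads(blob)


store = OffChainStore()
on_chain = []              # stand-in for the distributed ledger
supplier = pseudonymize("Farm Co.", b"demo-secret")
on_chain.append(store.put({"supplier": supplier, "lab_result": "negative"}))
```

Auditors can confirm that a record is unchanged by recomputing its digest, while the ledger itself never carries the confidential content or the supplier's real name.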

In interviews, Karen highlights the positive reception from frontline staff when privacy controls are built into daily operations.
