Start with a defined baseline for core processes; it aligns measurement with customer value and makes progress visible.

Exabytes of data now drive decisions. Moving beyond siloed systems clarifies the objective, lowers failure risk, and increases value to customers.

To avoid waste, establish a baseline for data volume and back it with governance: clear ownership, cross-functional collaboration, and defined roles.

With C-suite backing, translate data into actions; those leaders must align budgets, priorities, and milestones.

Before this work begins, make sure the baseline is accurate. Data volume matters, and customer outcomes improve once the baseline is right.

Using cross-functional mapping, teams convert insights into measurable actions that affect service levels, costs, resilience, and customer satisfaction.

Going forward, define success metrics, keep the baseline current, prevent knowledge from becoming siloed, and keep the customer at the center.

Rather than chasing hype, operations, finance, and commercial teams must adopt this framework together to deliver a tangible definition of success, anchored to a defined baseline.

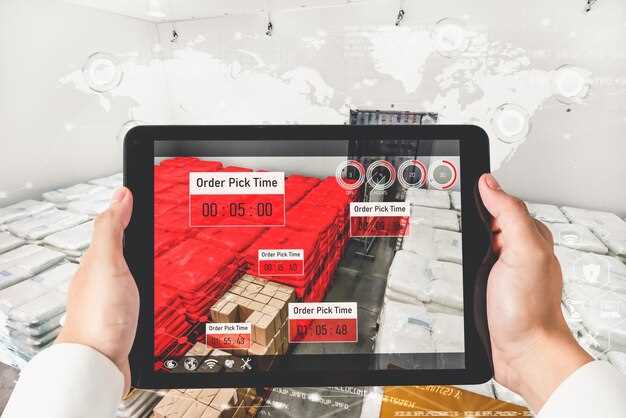

Key Updates Driving Global Supply Chain Visibility

Recommendation: implement a unified data fabric that ingests unstructured feeds and structured metrics from devices, warehouses, and carriers to provide real-time visibility. Most organizations seek a balance between speed and governance, but above all you need a defined data model that tracks events across sessions and devices to understand behavior. That model shows where data matters and where failure points can be detected early, so you can act on them.

Knowing how unstructured signals map to metrics matters, and the same framework works across most use cases. To be concrete, plan for gigabytes of telemetry per day and set retention according to business needs. Governance should include data lineage, access controls, and audit trails to prevent failures caused by misinterpretation. Track progress with metrics such as on-time data arrival, event latency, and confidence in anomaly alerts; these show where issues originate and what action is required. The approach is designed to scale as data streams grow.
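To make the progress metrics concrete, the sketch below computes on-time data arrival, event latency, and breaches per source from ingestion timestamps. It is a minimal illustration, not a prescribed implementation: the `Event` record, its field names, and the idea of a single SLA window are assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import quantiles


@dataclass
class Event:
    source: str            # feed name, e.g. a device, warehouse, or carrier (illustrative)
    occurred_at: datetime  # when the event happened at the source
    received_at: datetime  # when the data fabric ingested it


def latency_seconds(event: Event) -> float:
    """Event latency: time from occurrence to ingestion, in seconds."""
    return (event.received_at - event.occurred_at).total_seconds()


def on_time_rate(events: list[Event], sla: timedelta) -> float:
    """Share of events that arrived within the SLA window."""
    if not events:
        return 0.0
    on_time = sum(1 for e in events if e.received_at - e.occurred_at <= sla)
    return on_time / len(events)


def p95_latency(events: list[Event]) -> float:
    """95th-percentile latency in seconds (needs at least two events)."""
    # "inclusive" interpolates within the observed range instead of extrapolating.
    return quantiles([latency_seconds(e) for e in events], n=100, method="inclusive")[94]


def late_by_source(events: list[Event], sla: timedelta) -> dict[str, int]:
    """Count SLA breaches per source, to show where issues originate."""
    counts: dict[str, int] = {}
    for e in events:
        if e.received_at - e.occurred_at > sla:
            counts[e.source] = counts.get(e.source, 0) + 1
    return counts
```

Grouping breaches by source is one simple way to answer "where do issues originate"; a real deployment would likely slice by facility, carrier, and time window as well.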

Implementation Steps

Implementation steps: start with a defined set of core events, map them to standardized schemas, and enable edge-to-cloud sessions across devices and facilities. Next, establish a unified data store that can handle gigabytes per day and serve fast queries for operators and planners. Above all, implement an alerting model that flags behavioral deviations early and triggers automated remediation where feasible. Put multi-party data-sharing agreements in place and enforce data quality rules to prevent misalignment. Most importantly, maintain a single source of truth with clear ownership and documented metric definitions, so teams can track progress and reduce failure modes.
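Two of these steps, mapping source events onto a standardized schema and flagging behavioral deviations early, can be sketched in a few lines. The source names, field mappings, and the 3-sigma rule below are hypothetical choices for illustration; a z-score check is only one simple stand-in for an alerting model.

```python
from statistics import mean, stdev

# Hypothetical mappings from source-specific payload keys to one canonical event schema.
FIELD_MAPS = {
    "wms": {"sku": "item_id", "qty": "quantity", "site": "warehouse"},
    "carrier": {"sku": "sku_code", "qty": "units", "site": "hub"},
}
CANONICAL_FIELDS = ("sku", "qty", "site")


def to_canonical(source: str, payload: dict) -> dict:
    """Rename source-specific keys to canonical ones; reject payloads missing a field."""
    mapping = FIELD_MAPS[source]
    missing = [f for f in CANONICAL_FIELDS if mapping[f] not in payload]
    if missing:
        raise ValueError(f"{source} payload lacks canonical fields {missing}")
    event = {f: payload[mapping[f]] for f in CANONICAL_FIELDS}
    event["source"] = source
    return event


def flag_deviation(history: list[float], value: float, threshold: float = 3.0) -> bool:
    """Flag a value more than `threshold` standard deviations from the recent mean."""
    if len(history) < 2:
        return False  # not enough history to judge
    sd = stdev(history)
    if sd == 0:
        return value != history[0]
    return abs(value - mean(history)) / sd > threshold
```

Rejecting incomplete payloads at the mapping step is one way to enforce the data quality rules mentioned above before bad records reach the single source of truth.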

Leveraging Real-Time Data for Inventory Decisions

Implement a real-time data loop that refreshes stock positions every 15 minutes across stores, DCs, and online channels, and auto-adjust purchasing for top SKUs. This simple, measured approach reduces stockouts and excess inventory while speeding execution.

Establish a lightweight backbone that ingests POS, WMS, ERP, supplier portal, and IoT signals into a streaming layer. Target latency under 15 minutes for fast-moving items and under 60 minutes for steady items. These pipelines are more reliable when aligned to a single data model with clear ownership, which lets teams across the organization work with confidence.
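The two latency targets translate naturally into a freshness check per feed. This is a minimal sketch assuming each feed is described by a name, a tier label (`fast` or `steady`, both hypothetical), and its last update time; only the 15- and 60-minute thresholds come from the text.

```python
from datetime import datetime, timedelta

# Latency targets from the text; the tier labels are illustrative.
LATENCY_TARGETS = {
    "fast": timedelta(minutes=15),
    "steady": timedelta(minutes=60),
}


def latency_breaches(feeds: list[tuple[str, str, datetime]], now: datetime) -> list[tuple[str, timedelta]]:
    """Return (feed_name, lag) for every feed whose last update exceeds its tier target.

    Each feed is a (name, tier, last_updated) tuple.
    """
    breaches = []
    for name, tier, last_updated in feeds:
        lag = now - last_updated
        if lag > LATENCY_TARGETS[tier]:
            breaches.append((name, lag))
    return breaches
```

Running this on each refresh cycle and routing non-empty results to an alert channel is one way to implement "alerting for breaches".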

Unstructured inputs such as supplier messages, emails, and notes require normalization and tagging, with NLP-assisted extraction to convert text into usable fields. Create a learning loop that captures stakeholder feedback and turns it into concrete rules, stored in a central ruleset that purchasing, planning, and operations can reuse.
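As a rough illustration of turning free text into fields, the sketch below uses regular expressions and keyword tags. This is a deliberately simple, rule-based stand-in for the NLP-assisted extraction described above, not an NLP model. The patterns, field names, and tag keywords are hypothetical and would be part of the reusable ruleset.

```python
import re
from datetime import date

# Hypothetical patterns and keyword tags for supplier messages.
PO_PATTERN = re.compile(r"\bPO[\s#-]*(\d{4,})\b", re.IGNORECASE)
QTY_PATTERN = re.compile(r"\b(\d+)\s*(?:units|pcs|pieces)\b", re.IGNORECASE)
ISO_DATE_PATTERN = re.compile(r"\b(\d{4})-(\d{2})-(\d{2})\b")
TAG_KEYWORDS = {
    "delay": ("delay", "late", "postpone"),
    "quality": ("defect", "damaged", "quality"),
    "price": ("price increase", "surcharge"),
}


def extract_fields(text: str) -> dict:
    """Turn a free-text supplier message into structured fields (None when absent)."""
    po = PO_PATTERN.search(text)
    qty = QTY_PATTERN.search(text)
    eta = ISO_DATE_PATTERN.search(text)
    lowered = text.lower()
    return {
        "po_number": po.group(1) if po else None,
        "quantity": int(qty.group(1)) if qty else None,
        "new_eta": date(*map(int, eta.groups())) if eta else None,
        "tags": sorted(tag for tag, words in TAG_KEYWORDS.items()
                       if any(w in lowered for w in words)),
    }
```

Stakeholder feedback from the learning loop could add keywords or patterns here without touching downstream consumers, because they only see the structured fields.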

Executive sponsorship and stakeholder alignment across purchasing, planning, logistics, and merchandising are essential. Sponsors are responsible for setting guardrails, approving thresholds, and ensuring governance. Use the same dashboards for all audiences, with role-based views that keep each view focused without overload.

Moving from intuition to data-driven decisions happens through a staged approach. Start with three pilot projects covering fast movers, seasonal items, and a control category, then scale after hitting defined targets: stockouts down 20–40%, service level improvements of 5–12 points, and turns lift of 1.2–1.6x.
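A scale-up gate for each pilot can be expressed as a simple check against the stated targets. The sketch below assumes the lower bound of each target range serves as the minimum bar; that interpretation, along with the `PilotResult` fields, is an assumption rather than something the text prescribes.

```python
from dataclasses import dataclass


@dataclass
class PilotResult:
    stockout_rate_before: float  # e.g. fraction of SKU-days out of stock
    stockout_rate_after: float
    service_level_before: float  # percent, e.g. 92.0
    service_level_after: float
    turns_before: float          # inventory turns per year
    turns_after: float


# Lower bounds of the target ranges in the text, used here as minimum gates.
MIN_STOCKOUT_REDUCTION = 0.20
MIN_SERVICE_GAIN_POINTS = 5.0
MIN_TURNS_MULTIPLE = 1.2


def ready_to_scale(r: PilotResult) -> dict[str, bool]:
    """Check each target and report whether the pilot clears all of them."""
    stockout_reduction = 1 - r.stockout_rate_after / r.stockout_rate_before
    service_gain = r.service_level_after - r.service_level_before
    turns_multiple = r.turns_after / r.turns_before
    checks = {
        "stockouts": stockout_reduction >= MIN_STOCKOUT_REDUCTION,
        "service_level": service_gain >= MIN_SERVICE_GAIN_POINTS,
        "turns": turns_multiple >= MIN_TURNS_MULTIPLE,
    }
    checks["scale"] = all(checks.values())
    return checks
```

Returning each check separately, not just a single yes/no, shows which target a pilot missed.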

- Cadence and backbone: implement streaming ingestion, define latency targets, and establish alerting for breaches; align data definitions across locations.

- Data quality and unstructured signals: normalize fields, tag attributes, apply NLP to supplier documents, and maintain a living data dictionary; assign data owners (stakeholder teams).

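- Decision rules and calculations: use a transparent rule set with formulas such as ROP = demand_rate × lead_time + z × sigma × sqrt(lead_time), where sigma is the standard deviation of demand per period and z sets the target service level; experiment with thresholds to balance service against carrying costs.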

- Governance and learning: schedule quarterly reviews with executives and teams; capture lessons from each cycle; update rules and thresholds accordingly.

- Pilot to scale: define success criteria, monitor the same KPIs, and document learnings for roll-out across categories and channels.

- Outcomes and measurement: track purchasing cycles, days of supply, inventory velocity, and gross margin impact; report improvements to stakeholders with clear visuals.
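The reorder-point formula from the decision-rules item can be computed directly. This sketch derives z from a target cycle service level using the standard normal quantile, which assumes normally distributed demand; the function and parameter names are illustrative.

```python
from math import sqrt
from statistics import NormalDist


def reorder_point(demand_rate: float, lead_time: float, sigma: float, service_level: float) -> float:
    """ROP = demand_rate * lead_time + z * sigma * sqrt(lead_time).

    demand_rate and sigma are per period (e.g. units/day); lead_time uses the same period.
    z is the standard-normal quantile for the target cycle service level (0 < level < 1).
    """
    z = NormalDist().inv_cdf(service_level)
    safety_stock = z * sigma * sqrt(lead_time)
    return demand_rate * lead_time + safety_stock
```

To "experiment with thresholds", one could evaluate `reorder_point(40, 9, 12, level)` for levels such as 0.90, 0.95, and 0.98 and compare the extra safety stock against the carrying cost at each level.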

Nearshoring vs Offshoring: Quick Decision Guide

Recommendation: nearshoring wins when speed to market, stakeholder buy-in, and stock health matter most; offshoring wins when the cost advantage of distant locations dominates and longer lead times are acceptable.

Definition: Nearshoring places production within a short geographic radius of customers; offshoring relocates functions to distant regions to reduce cost.

Parameters to compare: important metrics include cost per unit, lead times, stock health, regulatory risk, currency volatility, talent availability, IP protection, time zone alignment, quality control, vendor readiness.

Action plan: collect data quickly, review market reports, define goals, quantify requirements, set failure thresholds, secure stakeholder buy-in, and determine the optimal mix using the defined parameters.
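One common way to "determine the optimal mix using defined parameters" is a weighted scoring matrix with a minimum-score floor acting as a failure threshold. The sketch below is that technique, not a method from the text. It uses a subset of the parameters listed above, and the weights, the 1–5 scale, and the floor are hypothetical and need calibration.

```python
# Illustrative weights (summing to 1.0) over a subset of the comparison parameters.
WEIGHTS = {
    "cost_per_unit": 0.30,
    "lead_time": 0.25,
    "regulatory_risk": 0.15,
    "currency_volatility": 0.10,
    "talent_availability": 0.10,
    "time_zone_alignment": 0.10,
}


def weighted_score(scores: dict[str, float]) -> float:
    """Combine 1-5 scores (higher is better) into one weighted score."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing scores: {sorted(missing)}")
    return sum(WEIGHTS[k] * scores[k] for k in WEIGHTS)


def rank_options(options: dict[str, dict[str, float]], floor: float = 1.0) -> list[str]:
    """Drop options scoring below `floor` on any parameter, then rank best-first."""
    eligible = {name: s for name, s in options.items() if min(s.values()) >= floor}
    return sorted(eligible, key=lambda name: weighted_score(eligible[name]), reverse=True)
```

The floor keeps a cheap option from winning on cost alone when it fails a hard requirement, such as an unacceptable lead time.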

Practical guardrails: align with customer expectations; measure response times, stock availability, and service levels; recognize that a nearer location generally improves stock health; use logistics science to support risk assessment; and keep parameters transparent. Possible outcomes include faster response times, and regular readouts help stakeholders track goals.

Note: this decision framework preserves flexibility and remains relevant when market conditions shift. Commit to a defined set of requirements, and let metrics drive quarterly reviews that keep customer health metrics aligned.

AI, Analytics, and Digital Twins for Demand Forecasting

Start with a 12-week pilot that deploys AI-driven demand forecasting through digital twins. Use real-time sensors and integrations to address variability, answer critical questions across the distribution network, and support stakeholders who need faster decisions. Expect forecast accuracy gains of 15–25% and stockout reductions of 5–15%, with possible upside as data quality improves. Begin with a single product family, expand incrementally, and track milestones as tasks are completed. Starting small keeps the path to broader rollout manageable.

Data foundation: connect core planning data, POS feeds, supplier calendars, and shipment records through a lightweight integration layer. Use sensors for real-time signals and feed digital twins that simulate demand and replenishment under different scenarios. Across distribution points, the model estimates service levels and inventory positions, enabling rapid what-if analysis and proactive adjustments that prevent drift and misalignment.
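A full digital twin is far richer than any snippet, but its core what-if loop can be sketched as a small Monte Carlo simulation. The version below models one location under a reorder-point policy and estimates the fill rate. It assumes normally distributed daily demand truncated at zero, lost sales, and a fixed lead time; all of these are simplifying assumptions.

```python
import random


def simulate_fill_rate(reorder_point: float, order_qty: float, lead_time_days: int,
                       mean_demand: float, sd_demand: float,
                       days: int = 365, seed: int = 0) -> float:
    """Simulate one location under a reorder-point policy; return the share of demand served.

    Unmet demand is treated as lost; the inventory position includes open orders.
    """
    if lead_time_days < 1:
        raise ValueError("lead_time_days must be at least 1")
    rng = random.Random(seed)
    on_hand = reorder_point + order_qty        # start with one full cycle on hand
    open_orders: list[tuple[int, float]] = []  # (arrival_day, quantity)
    demanded = served = 0.0
    for day in range(days):
        # Receive any replenishment due today.
        on_hand += sum(q for due, q in open_orders if due <= day)
        open_orders = [(due, q) for due, q in open_orders if due > day]
        # Draw today's demand (truncated at zero) and ship what is available.
        demand = max(0.0, rng.gauss(mean_demand, sd_demand))
        shipped = min(on_hand, demand)
        on_hand -= shipped
        demanded += demand
        served += shipped
        # Reorder when the inventory position falls to the reorder point.
        position = on_hand + sum(q for _, q in open_orders)
        if position <= reorder_point:
            open_orders.append((day + lead_time_days, order_qty))
    return served / demanded if demanded > 0 else 1.0
```

Because the seed fixes the demand path, comparing `simulate_fill_rate(50, 300, 7, 40, 12)` with `simulate_fill_rate(400, 300, 7, 40, 12)` isolates the effect of the reorder point, which is the essence of a what-if run.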

Analytics approach: use Bayesian updating, ensemble time-series models, and probabilistic scenarios to generate forecast intervals. Digital twins run in parallel and update as new sensor data arrives, surfacing improvements that stakeholders can act on. The result is tighter alignment and faster, data-driven decisions that help businesses set expectations and respond quickly.
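The Bayesian-updating piece can be illustrated with the simplest conjugate case. A normal prior on mean weekly demand is updated with observed weeks, and the result yields a predictive interval for next week. This sketch assumes a known observation variance and covers only this one component; it omits the ensemble models and scenarios mentioned above.

```python
from math import sqrt
from statistics import NormalDist


def update_demand_belief(prior_mean: float, prior_var: float,
                         observations: list[float], noise_var: float) -> tuple[float, float]:
    """Normal-normal conjugate update of mean weekly demand (noise variance assumed known)."""
    n = len(observations)
    precision = 1.0 / prior_var + n / noise_var
    post_var = 1.0 / precision
    post_mean = post_var * (prior_mean / prior_var + sum(observations) / noise_var)
    return post_mean, post_var


def forecast_interval(post_mean: float, post_var: float, noise_var: float,
                      level: float = 0.90) -> tuple[float, float]:
    """Central predictive interval for next week's demand."""
    predictive = NormalDist(post_mean, sqrt(post_var + noise_var))
    tail = (1 - level) / 2
    return predictive.inv_cdf(tail), predictive.inv_cdf(1 - tail)
```

Each new week of data can be fed back in, with the previous posterior serving as the next prior, so the interval narrows as evidence accumulates.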

Organizational Alignment and Governance

Establish an initiative with cross-functional sponsorship; define owners and address data quality, privacy, and security. Integration across technical stacks should follow standards and best practices. Acharya notes that aligning departments resembles guiding a horse along a narrow path, which calls for simple, repeatable steps and visible wins. Start from a minimal viable model; the team can then apply lessons learned, improve the plan iteratively, and keep tasks on schedule. This may require cultural shifts, but the payoff helps organizations seeking reliable demand signals and better inventory performance.

ESG, Compliance, and Supplier Risk Monitoring

Recommendation: deploy a single ESG, compliance, and supplier risk monitoring framework that aggregates data streams from procurement, finance, sustainability, and QA. Configure analytics to assign risk scores and produce a complete report for managers. Start with small technical projects, use agile workflows to speed response, and draw on prior experience to calibrate thresholds. Compressing feedback loops this way probably shortens cycles.
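A single 0–100 risk score can be built by normalizing each stream's signal and taking a weighted sum. In the sketch below, the stream weights and the amber/red band thresholds are hypothetical placeholders. These are exactly the values the text suggests calibrating from prior experience.

```python
# Illustrative weights per data stream; calibrate against prior experience.
STREAM_WEIGHTS = {"procurement": 0.25, "finance": 0.30, "sustainability": 0.25, "qa": 0.20}


def normalize(value: float, worst: float, best: float) -> float:
    """Map a raw metric onto 0 (best) .. 1 (worst), clamped; works in either direction."""
    if worst == best:
        raise ValueError("worst and best must differ")
    x = (value - best) / (worst - best)
    return min(1.0, max(0.0, x))


def risk_score(signals: dict[str, float]) -> float:
    """Weighted 0-100 supplier risk score from per-stream signals already normalized to 0..1."""
    return round(100 * sum(STREAM_WEIGHTS[s] * signals[s] for s in STREAM_WEIGHTS), 1)


def risk_band(score: float, amber: float = 40, red: float = 70) -> str:
    """Bucket a score for reporting and escalation (thresholds are placeholders)."""
    if score >= red:
        return "red"
    if score >= amber:
        return "amber"
    return "green"
```

For example, a supplier audit score where higher is better could be normalized with `normalize(score, worst=0, best=100)`, while a metric such as late deliveries would use the opposite orientation.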

Based on current data, these measures give managers visibility into every tier of the supplier base. Analytics-driven views provide a concise experience, and the data streams reveal negative events, problems, and compliance drift. Reviewing high-spend categories more frequently may highlight further opportunities. An ounce of prevention saves budget by reducing remediation, and the approach can grow into a scalable solution.

Measurement Framework

Key components include data provenance, thresholding, alerting, remediation workflows, and sufficiently granular data across suppliers, categories, and geographies. Actionable signals trigger a response, backed by escalation procedures. These steps reduce failure risk, boost resilience, and support continuous improvement, while clearly defined ownership improves accountability.

| Source | Action | KPI |
|---|---|---|
| ESG ratings | aggregate, normalize, alert | risk score 0–100 |
| Compliance violations | root cause analysis, remediation tracking | average days to closure |
| Financial health | continuous monitoring, credit risk signals | DSCR, days payable outstanding (DPO) |
| Operational risk | predictive signals, disruption alerts | mean time to recovery |
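The table's KPIs follow standard definitions. DSCR is net operating income divided by total debt service. DPO is average accounts payable divided by cost of goods sold, multiplied by the days in the period. Mean time to recovery is the average duration of disruptions. The sketch below computes each one. The input shapes, such as (opened, closed) date pairs for compliance cases and recovery durations in hours, are assumptions made for the example.

```python
from datetime import date
from typing import Optional


def avg_days_to_close(cases: list[tuple[date, Optional[date]]]) -> Optional[float]:
    """Mean days from opening to closing, over closed compliance cases only."""
    durations = [(closed - opened).days for opened, closed in cases if closed is not None]
    return sum(durations) / len(durations) if durations else None


def dscr(net_operating_income: float, total_debt_service: float) -> float:
    """Debt service coverage ratio; below 1.0 means income does not cover debt payments."""
    return net_operating_income / total_debt_service


def days_payable_outstanding(avg_accounts_payable: float, cogs: float, days: int = 365) -> float:
    """DPO = average accounts payable / cost of goods sold * days in the period."""
    return avg_accounts_payable / cogs * days


def mean_time_to_recovery(recovery_hours: list[float]) -> Optional[float]:
    """Average time to recover from operational disruptions, in the input's units."""
    return sum(recovery_hours) / len(recovery_hours) if recovery_hours else None
```

Open cases are excluded from the closure average here; tracking their age separately avoids hiding long-running violations.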

Don’t Miss Tomorrow’s Supply Chain News – Key Updates and Trends
