Recommendation: verify safety claims with independent statistics before any broad rollout. Treat corporate promises as grounds for verification, not gospel, and allow deployments that depend on public consent only after the evidence supports them.

Most observers demand clarity where perception diverges from reality. Signage, marketing, and public briefings often blur risk. A rigorous approach checks rear-camera reports against cabin data to establish what actually happens on highways as speeds rise.

Be cautious of advertising claims that spotlight dramatic success; the grounds for trust lie in controlled trials, not glossy narratives. Statistics from independent testers show mixed results: some tests pass fail-safe checks, while others reveal dangerously low margins under stress.
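To make "mixed results" concrete, a pass rate from a small test series is better reported with an interval than as a bare percentage. The sketch below uses the standard Wilson score interval for a binomial proportion; the lab names and counts are hypothetical, invented only for illustration.

```python
import math


def wilson_interval(passes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a pass rate (z = 1.96 gives roughly 95% confidence)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    if not 0 <= passes <= trials:
        raise ValueError("passes must be between 0 and trials")
    p_hat = passes / trials
    denom = 1 + z * z / trials
    centre = (p_hat + z * z / (2 * trials)) / denom
    half = (z / denom) * math.sqrt(p_hat * (1 - p_hat) / trials + z * z / (4 * trials * trials))
    # Clamp to [0, 1] to absorb floating-point rounding at the extremes.
    return max(0.0, centre - half), min(1.0, centre + half)


# Hypothetical independent results: (lab, fail-safe checks passed, checks run).
results = [("lab_a", 48, 50), ("lab_b", 9, 12)]
for lab, passed, run in results:
    lo, hi = wilson_interval(passed, run)
    print(f"{lab}: {passed}/{run} passed, 95% interval {lo:.2f} to {hi:.2f}")
```

A narrow interval close to 1 is the kind of evidence worth waiting for; a wide interval, as with a 12-run series, signals that more independent tests are needed before rollout.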

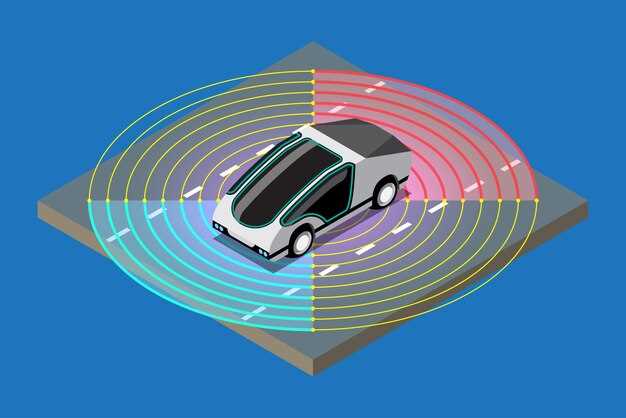

Most problems arise when guidance systems mishandle urban quirks: erratic pedestrians, slick surfaces, roadworks. Corporate processes require permanent fail-safes; if sensors misread, hardware safeguards must prevent fatally wrong actions. Design teams must ensure that this coverage remains robust at the rear, front, and sides.
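One way to read the fail-safe requirement is as a decision rule that biases every uncertain case toward the safer action. The sketch below is a hypothetical illustration, not a production controller: the zone names, the two-sensors-per-zone rule (`MIN_HEALTHY_PER_ZONE`), and the `Action` values are assumptions.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    PROCEED = "proceed"
    BRAKE = "brake"
    MINIMAL_RISK_STOP = "minimal_risk_stop"  # fallback when coverage is lost


@dataclass(frozen=True)
class Reading:
    zone: str       # "front", "rear", "left" or "right"
    healthy: bool   # sensor passed its self-diagnostic
    obstacle: bool  # sensor reports an obstacle in its zone


ZONES = ("front", "rear", "left", "right")
MIN_HEALTHY_PER_ZONE = 2  # assumption: two independent sensors per zone


def decide(readings: list[Reading]) -> Action:
    """Hypothetical fail-safe rule that prefers the safer action when in doubt."""
    # 1. Coverage check: any zone without enough healthy sensors forces a fallback stop.
    for zone in ZONES:
        healthy = sum(1 for r in readings if r.zone == zone and r.healthy)
        if healthy < MIN_HEALTHY_PER_ZONE:
            return Action.MINIMAL_RISK_STOP
    # 2. OR-voting: one healthy sensor reporting an obstacle is enough to brake,
    #    so a single misread "all clear" cannot hide a real hazard.
    if any(r.healthy and r.obstacle for r in readings):
        return Action.BRAKE
    return Action.PROCEED


# Hypothetical usage: full coverage, then a front sensor reports an obstacle.
clear = [Reading(zone, True, False) for zone in ZONES for _ in range(2)]
print(decide(clear), decide(clear + [Reading("front", True, True)]))
```

OR-voting trades more false alarms for fewer missed hazards; a real system would weigh that against unnecessary braking, which carries its own risk.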

Public discourse should recognize that results published in corporate campaigns won't reflect complex edge cases. A practical path: implement roadside monitors, rigorous validation, and post-deployment reviews; record lessons learned and share progress openly. Most improvements require iterative cycles, not a single release.

Inspiration for best practice can come from unrelated industries; a note from mcdo favors reliability over spectacle. Risk reduction relies on steel components, rigorous testing, and permanent safeguards, and the plan should limit exposure on busy highways until verification passes.

When done correctly, reforms yield measurable improvements such as rear alerting, redundancy, and rigorous fault analysis; most experiments confirm safety improvements when metrics are transparent, repeatable, and publicly available.

Momentum is shifting: families relying on safer transport expect predictable performance on highways, and meeting that expectation requires transparent reporting, not sensational advertising or quick fixes.

Rear sensors, hardware redundancy, and fail-safe protocols should be treated as standard, not optional; most lasting improvements rely on discipline rather than charm.

Forget nothing: safety culture demands ongoing audits, transparent data feeds, and cross-border replication, and decisions should rely on statistics, not spontaneous impulses.

Information Plan

Adopt a data-first governance model focused on velocity targets, incident counts, and goods flow.

Publish a blueprint in a public forum so that everyone works from clear terms, metrics are visible, and responsibilities are assigned.

Here is a concrete path you could use right away to reduce risks in York deployments.

Key metrics include velocity consistency across routes, rear-collision rate, and hit-avoidance rate, along with how often each event is observed and whether it is mentioned in safety reviews. Reliability goals should be high; unlike manual driving, automated checks reduce variance.
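A minimal sketch of how these per-route metrics might be computed from logged data. The `RouteLog` fields, the per-1,000-km normalization, and the use of the coefficient of variation as the measure of velocity consistency are all assumptions chosen for illustration.

```python
import math
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class RouteLog:
    route: str
    speeds_kmh: list[float]  # speed samples logged along the route
    km_driven: float
    rear_collisions: int
    conflicts: int           # events where a hit was a real risk
    conflicts_avoided: int   # of those, events resolved without contact


def route_metrics(log: RouteLog) -> dict[str, float]:
    if log.km_driven <= 0 or not log.speeds_kmh:
        raise ValueError("need positive distance and at least one speed sample")
    avg = mean(log.speeds_kmh)
    return {
        # Coefficient of variation: lower values mean more consistent velocity.
        "velocity_cv": pstdev(log.speeds_kmh) / avg if avg else math.nan,
        # Normalized by distance so routes of different lengths are comparable.
        "rear_collisions_per_1000_km": 1000 * log.rear_collisions / log.km_driven,
        # Share of risky conflicts that ended without contact (NaN if none occurred).
        "avoidance_rate": log.conflicts_avoided / log.conflicts if log.conflicts else math.nan,
    }


# Hypothetical log for one route.
example = RouteLog("route_7", [40.0, 60.0], km_driven=2000.0,
                   rear_collisions=1, conflicts=20, conflicts_avoided=19)
print(route_metrics(example))
```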

Data should be cleaned and shared in a common format, with chain of custody documented, access controls defined, and audits scheduled quarterly; regulators want exactly these improvements.
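Documented chain of custody can be made tamper-evident by hash-chaining each log record to the one before it. This is a sketch under that assumption, using SHA-256 from Python's standard library; the record fields and the function names `append_record` and `verify_chain` are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so identical content always hashes identically.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_record(chain: list[dict], payload: dict, custodian: str) -> dict:
    """Append a record whose hash covers its payload, custodian and the previous hash."""
    body = {
        "payload": json.loads(json.dumps(payload)),  # deep copy of the logged data
        "custodian": custodian,
        "prev_hash": chain[-1]["hash"] if chain else GENESIS,
    }
    record = {**body, "hash": _digest(body)}
    chain.append(record)
    return record


def verify_chain(chain: list[dict]) -> bool:
    """Recompute every hash and link; any edited or reordered record fails."""
    prev = GENESIS
    for record in chain:
        body = {k: record[k] for k in ("payload", "custodian", "prev_hash")}
        if record["prev_hash"] != prev or _digest(body) != record["hash"]:
            return False
        prev = record["hash"]
    return True


# Hypothetical usage: two hand-offs of the same incident log.
chain: list[dict] = []
append_record(chain, {"incident": "A-1", "speed_kmh": 42}, custodian="fleet_ops")
append_record(chain, {"incident": "A-1", "review": "opened"}, custodian="safety_audit")
print(verify_chain(chain))
```

Any edit to an earlier record changes its hash and breaks every link after it, which gives quarterly auditors a cheap integrity check. It does not prove who made a change; access controls and signatures would still be needed.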

Aviation practices offer a benchmark: independent audits, red-team tests, safeguards against serious misinterpretation, and transparent reporting of near misses, with lessons translated into code.

In riding scenarios, reasonable expectations should guide policy: avoid sensationalism and, whatever happens, let data-driven reviews prevail. York community forums host talks where everyone can see the difference between claims and measured results. Velocity, reverse maneuvers, and riding events are recorded; you'd see rear alerts when hitting-risk thresholds are crossed, and reports summarize performance variations across entire routes, so you'd know where to start.

What the driver-error myth misses in autonomous vehicle safety data

Recommendation: anchor risk assessment in multi-source incident data tied to a four-dimensional context (front sensor readings, road geometry, weather, and pedestrian density); compare linear and polynomial models to detect interaction effects; and run tests across device portfolios so automakers can use the results to drive safer designs.
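The comparison between linear and polynomial models can be illustrated with ordinary least squares: fit one model with main effects only and one that adds a product (interaction) term, then compare residual sums of squares. The sketch below is self-contained; the features (pedestrian density and a wet-road flag) and the noiseless synthetic data are hypothetical, chosen so the interaction is easy to see.

```python
def solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a square linear system by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit(rows: list[list[float]], y: list[float]) -> tuple[list[float], float]:
    """Ordinary least squares via the normal equations; returns coefficients and RSS."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    beta = solve(xtx, xty)
    rss = sum((yi - sum(b * v for b, v in zip(beta, r))) ** 2 for r, yi in zip(rows, y))
    return beta, rss


# Hypothetical synthetic data: near-miss rate rises faster with pedestrian
# density on wet roads (true model: 1 + 0.5*d + 0.2*w + 0.8*d*w).
density = [0.0, 1.0, 2.0, 3.0]
samples = [(d, w) for w in (0.0, 1.0) for d in density]
y = [1 + 0.5 * d + 0.2 * w + 0.8 * d * w for d, w in samples]

linear_rows = [[1.0, d, w] for d, w in samples]
interaction_rows = [[1.0, d, w, d * w] for d, w in samples]

beta_lin, rss_lin = fit(linear_rows, y)
beta_int, rss_int = fit(interaction_rows, y)
print(f"linear RSS={rss_lin:.3f}, with interaction RSS={rss_int:.3f}, "
      f"interaction coefficient={beta_int[3]:.3f}")
```

On this data the linear model leaves residual error that the interaction term removes, which is the signature of an interaction effect. On real, noisy data, a formal comparison of the nested models (for example, an F-test on the added term) would be needed before drawing conclusions.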

What these analyses often miss are pre-crash sequences. Proponents emphasize richer incident labeling; meanwhile, near-miss reports remain inconsistent, and incident signals reflect post-crash outcomes rather than the faults that initiated them. Pedestrians near the front path cause collision events with diverse effects. Readings from home devices could extend coverage, but data quality remains difficult to guarantee.

Four actionable steps address these gaps: expand telemetry sharing across automakers; require independent audits modeled on aviation; implement four-dimensional dashboards; and promote owner reports via home device kits.

| Context | Metric | Observation |
|---|---|---|
| Front sensor fusion accuracy | Linear residuals | Most incidents are linked to front-path misreads; four-dimensional framing reduces noise |
| Behavioral model effects | Polynomial vs. linear | Nonlinear interactions appear when pedestrians cross the front path; slow progress points to reporting gaps |
| Data sources | Coverage | Home devices add data; the largest gains occur when lawyers, tools, and inspectors cooperate; data quality remains difficult |

Bottom line: a four-dimensional view yields greater reliability than slow, piecemeal reports. Invest in device tests and aviation-style scrutiny; legal oversight helps reduce incidents. Before policy decisions, engineers must separate device faults from context; this benefits vehicle safety by clarifying front-path reactions and their effects.

How Uber’s fatal crash shifted industry trust and risk perceptions

Implement independent safety audits after fatal incidents to recalibrate risk assessments. The Tempe case exposed the gap: a pedestrian struck by a Volvo in Uber's test program triggered an immediate suspension of testing. Investigators found that the test system's misreading of the scene contributed to the outcome. Those who write policy guidance faced scrutiny, which broadened the scope of standard reviews.

A shift in risk perceptions followed public announcements; according to studies, confidence moved toward demanding rigorous verification instead of assuming flawless operation. Expect divergence among stakeholders, from extreme optimism on one side to extreme caution on the other. Positive signals from makers contrasted with warnings from regulators, which encouraged independent evaluation with metric-based performance checks.

Contact among parties should be formalized: regulators, lawyers, and makers share raw data, incident narratives, and risk models. Investigation records need to be accessible; this reduces false narratives and counters the argument that the system is infallible. The guidance here rejects guess-based claims and relies on evidence.

Turn these next steps into concrete practice by codifying exposure metrics, launching studies, and establishing contact with regulators; Volvo's input can supply guidance that shapes risk models.
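Codified exposure metrics usually mean normalizing incident counts by distance driven, and launching studies implies asking whether a difference from a baseline could be chance. The sketch below assumes incidents follow a Poisson process; the function names are hypothetical, and any real baseline rate and mileage would have to come from independent data.

```python
import math


def rate_per_million(incidents: int, miles: float) -> float:
    """Exposure-normalized incident rate (incidents per million miles)."""
    if miles <= 0:
        raise ValueError("miles must be positive")
    return incidents * 1_000_000 / miles


def poisson_cdf(k: int, lam: float) -> float:
    """P(X <= k) for X ~ Poisson(lam), summed term by term.
    Suitable for modest lam; math.exp(-lam) underflows to 0 for lam above about 745."""
    if k < 0:
        return 0.0
    term = math.exp(-lam)
    total = term
    for i in range(1, k + 1):
        term *= lam / i
        total += term
    return total


def fewer_than_baseline_p(incidents: int, miles: float, baseline_per_million: float) -> float:
    """One-sided p-value: chance of seeing at most `incidents` if the fleet
    merely matched the baseline rate over the same exposure."""
    expected = baseline_per_million * miles / 1_000_000
    return poisson_cdf(incidents, expected)


# Hypothetical numbers: 2 incidents over 1.5 million test miles,
# compared with an assumed baseline of 4 incidents per million miles.
print(rate_per_million(2, 1_500_000))
print(fewer_than_baseline_p(2, 1_500_000, 4.0))
```

A small p-value suggests the fleet had fewer incidents than the baseline would predict; it says nothing about whether the routes, weather, or traffic were comparable, which is why exposure must be matched before the comparison is trusted.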

Schneier on Security: applying threat modeling to AV deployment

Recommendation: implement a lightweight, STRIDE-inspired threat modeling framework at kickoff, focused on pedestrians, intersections, and data flows, so that risks are prioritized before rollout.

The asset map begins with ground sensors, vehicle controllers, the cloud database, plant redundancies, and emergency response channels. Threats are categorized using STRIDE: spoofing, tampering, repudiation, information disclosure, denial of service, and elevation of privilege. Focus on real-world patterns in fragmented urban areas, where the majority of incidents originate at ground-level interfaces such as taxi routes, crossings, and bus corridors. Provide metrics that allow prioritization across stakeholders, and ensure area-specific controls around pedestrians. Interviews with field staff in frontline operations reveal gaps.
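A threat register is one lightweight way to apply STRIDE to the asset map. In this sketch the assets come from the paragraph above, while the 1-to-5 likelihood and impact scales, the example threats, and the multiplicative score are assumptions; many teams use other scoring schemes.

```python
from dataclasses import dataclass
from enum import Enum


class Stride(Enum):
    SPOOFING = "S"
    TAMPERING = "T"
    REPUDIATION = "R"
    INFORMATION_DISCLOSURE = "I"
    DENIAL_OF_SERVICE = "D"
    ELEVATION_OF_PRIVILEGE = "E"


@dataclass(frozen=True)
class Threat:
    asset: str
    category: Stride
    description: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (frequent)
    impact: int      # assumed scale: 1 (minor) to 5 (fatal)

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def prioritize(threats: list[Threat]) -> list[Threat]:
    """Highest likelihood x impact first; ties broken by impact so severe harms surface earlier."""
    return sorted(threats, key=lambda t: (t.score, t.impact), reverse=True)


def uncovered(threats: list[Threat], assets: list[str]) -> dict[str, set[Stride]]:
    """STRIDE categories not yet examined for each asset."""
    return {a: set(Stride) - {t.category for t in threats if t.asset == a} for a in assets}


ASSETS = ["ground sensors", "vehicle controllers", "cloud database",
          "plant redundancies", "emergency response channels"]

# Hypothetical example entries.
register = [
    Threat("ground sensors", Stride.SPOOFING, "fake obstacle projected at a crossing", 3, 5),
    Threat("vehicle controllers", Stride.TAMPERING, "unsigned firmware update", 2, 5),
    Threat("cloud database", Stride.INFORMATION_DISCLOSURE, "trip logs expose rider locations", 3, 3),
    Threat("emergency response channels", Stride.DENIAL_OF_SERVICE, "flooded alert queue", 2, 5),
]

for t in prioritize(register):
    print(t.score, t.category.value, t.asset)
```

`uncovered` makes gaps explicit: any asset with STRIDE categories left unexamined is a prompt for the next field interview or red-team session.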

Deploy a cross-source anomaly detector that uses signals from sensors, vehicle controllers, and the backend database to reveal patterns that routine safety checks miss. This model supports a variety of risk profiles across city districts, and indicators derived from ground truth guide responses. Meanwhile, coordination with manufacturers, regulators, and municipal teams helps protect vulnerable zones, and careful contract framing with suppliers clarifies how risk is transferred. There remains room for improvement in the feedback loops of the data supply.
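The cross-source idea can be sketched simply: at each sample, take the median across independent sources as the consensus and flag any source that departs from it by more than a tolerance. The source names, the speed values, and the fixed `tolerance` are hypothetical; a deployed detector would also need time alignment and tuned thresholds.

```python
from statistics import median


def flag_disagreements(
    readings: dict[str, list[float]], tolerance: float
) -> list[tuple[int, str, float]]:
    """Return (sample index, source, deviation) wherever a source departs from
    the cross-source median by more than `tolerance`."""
    lengths = {len(values) for values in readings.values()}
    if len(lengths) != 1:
        raise ValueError("all sources must have the same number of samples")
    flags = []
    for i in range(lengths.pop()):
        consensus = median(values[i] for values in readings.values())
        for source, values in readings.items():
            deviation = values[i] - consensus
            if abs(deviation) > tolerance:
                flags.append((i, source, deviation))
    return flags


# Hypothetical speed estimates (m/s) from three independent sources.
speeds = {
    "sensor_fusion": [10.0, 10.2, 10.1, 14.0],
    "vehicle_controller": [10.1, 10.1, 10.0, 10.2],
    "backend_log": [10.0, 10.2, 10.1, 10.1],
}
print(flag_disagreements(speeds, tolerance=1.0))
```

The median needs at least three sources to localize a fault; with only two, both deviate equally from their midpoint and both get flagged.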

Alignment with procurement requires defined milestones. Meanwhile, testing occurs in selected areas around taxi corridors, school zones, and commercial districts; headquarters review boards synthesize the results and publish dashboards so politicians and residents can gauge progress. Risk budgets anchor design choices and ensure sufficient resources; safer defaults are designed in, and reserved capacity ensures resilience during peak loads.

Benchmark against competitors, using sold devices as baselines; there is room for optimization in sensor fusion. Stale risk narratives slow progress, while truly rigorous tests across taxi corridors, pedestrian zones, and campus routes improve safety. Residual risk still deserves scrutiny: performance targets should be set high enough to enable safer navigation for the majority of users, including vulnerable pedestrians in crowded spaces.

LORINC analysis: Sidewalk Labs’ governance, testing, and transparency gaps

Recommendation: establish an independent governance board, publish testing plans on open platforms, and require external validation. Given the political pressure, this approach helps folks, engineering teams, and regulators focus on safer outcomes. Doug highlighted the need for better governance that stops internal drift and for spending resources on open documentation; proponents observe that the probability of failure drops when risk coefficients are transparent. Open logs show the function of each decision, how edge cases are handled, and turbulence risk.

Gap one: the lack of an independent audit invites actors with divergent incentives. Another risk arises because the framework lacks transparent controls on budget allocation, and open access to meeting minutes remains limited. Multi-stakeholder governance requires gender-balanced representation, with experienced fellows from neighborhoods, environment, law enforcement, safety, and transportation consulted. This mix improves legitimacy and reduces blind spots.

Testing gaps include heavy reliance on internal coefficients, limited external pilots, data gaps across edge cases, and failure to simulate turbulence in dense traffic. Without independent safety-case reviews, the project cannot claim robust risk metrics. Doug notes that airline safety practices offer a blueprint for layered verification, and external peers lend credibility to probability estimates.

Transparency gaps: dashboards are hidden behind portals, metrics are buried in internal memos, and bloodless summaries are insufficient for public scrutiny. Open waterfront metrics would show real-world impact, including failure logs, remediation steps, and the cadence of updates. Gender reporting should be integrated with performance signals; fellows, residents, and police stakeholders gain trust through open review. Proactive disclosure blocks misinformation and boosts the story's credibility, and critics can participate and propose changes in real time.

Action plan: set a 120-day timeline for governance reform, publish public dashboards, convene monthly open sessions, assign responsibilities across actors, integrate police oversight, and subject budget spending to quarterly audits; deliver a narrative report detailing failures, lessons, and next steps. The aim is to rebuild trust among waterfront residents, engineers, fellows, gender groups, and folks generally, and ideally to sustain continued improvement.

NTSB findings and regulatory implications for driverless car investigations

Recommendation: consider centralized, auditable data streams; require independent, machine-centric investigations; and compel disclosure of testing figures. This frames the regulatory response as legal and measurable, which is worthwhile provided it is understood consistently across agencies. Taken together, these steps save time, help avoid fatally unsafe outcomes, and let regulators face problems with clear answers.

- Findings indicate that many investigations trace machine-level causes rather than operator mistakes; policy should start with fail-safe design, robust validation, and transparent incident trails, and responsibility allocation must be explicit.

- Regulatory implications include public incident repositories, standardized reporting formats, and mandatory sharing of sensor logs, software versions, and test environments; these steps save resources, avoid duplicated effort, and improve clarity for court reviews.

- Meanwhile, risk assessment levels require statistically significant data; criteria must include injury severity, property damage, and near misses. Figures from reported cases inform safer thresholds that regulators can enforce, and the many minor incidents supply context that regulators can compare.

- Testing protocols should cover simulated stress tests, closed-course demonstrations, and real-world pilots; this approach reduces unsafe outcomes as machines demonstrate reliability across varying conditions, though vexed safety questions remain that require measured responses.

- Enforcement tools comprise recall authority, civil penalties, and corrective action plans; regulators require traceable mitigations before scale-up. Legal pathways for accountability become clearer, and the framework addresses injury risk while supporting accountability where injuries occur.

- Policy must address equity concerns for folks exposed to different risk levels; the aim remains delivering safer deployment while fueling innovation. Start from a shared mission, measure progress, and report results, so the mission stays grounded in data.

- Investigations should begin with machine performance, data integrity, and testing outcomes rather than focusing solely on operator actions; meanwhile, lessons learned and published in reports support a learning loop that informs legal cases and future upgrades.
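Standardized reporting formats are easier to enforce when they are machine-checkable. The dataclass below is a hypothetical schema, not any regulator's actual format: the field names, the severity scale, and the three test environments (mirroring the simulated, closed-course, and real-world stages listed above) are assumptions.

```python
import json
from dataclasses import asdict, dataclass, field

SEVERITIES = ("none", "minor", "serious", "fatal")             # assumed injury scale
ENVIRONMENTS = ("simulation", "closed_course", "public_road")  # assumed test stages


@dataclass
class IncidentReport:
    incident_id: str
    software_version: str
    test_environment: str
    injury_severity: str
    property_damage: bool
    near_miss: bool
    sensor_log_uris: list[str] = field(default_factory=list)

    def validate(self) -> list[str]:
        """Return a list of problems; an empty list means the report is complete."""
        problems = []
        if not self.software_version:
            problems.append("software_version is required")
        if self.test_environment not in ENVIRONMENTS:
            problems.append(f"unknown test_environment: {self.test_environment!r}")
        if self.injury_severity not in SEVERITIES:
            problems.append(f"unknown injury_severity: {self.injury_severity!r}")
        if not self.sensor_log_uris:
            problems.append("at least one sensor log reference is required")
        return problems

    def to_json(self) -> str:
        # Sorted keys give a stable serialization for public repositories.
        return json.dumps(asdict(self), sort_keys=True)


# Hypothetical report.
report = IncidentReport(
    incident_id="INC-0001",
    software_version="2.4.1",
    test_environment="public_road",
    injury_severity="none",
    property_damage=False,
    near_miss=True,
    sensor_log_uris=["logs/inc-0001/lidar.bin"],
)
print(report.validate(), report.to_json())
```

Rejecting incomplete reports at submission time is what lets regulators compare cases across fleets without first reconciling formats.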