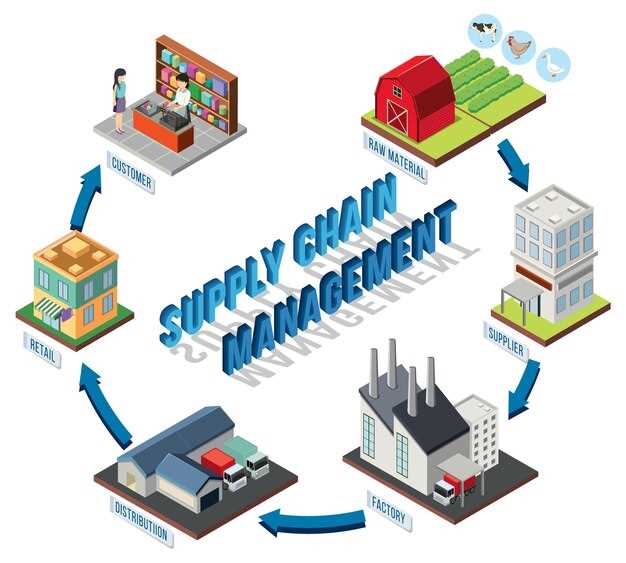

Begin by implementing a traceable data layer across partners to maximize nutrition integrity, minimize spoilage, and secure a reliable destination for goods.

Integration supports real-time tracing from production to consumer, aligning with GFSI measures and industry standards.

Developers focus on purpose-built data models, staying mindful of provenance and the addition of verifiable records, which enables nutrition claims with real-time confidence. That power truly translates into reducing fraud and building trust across a formidable industry.

Governance frameworks prioritize minimizing data exposure while maintaining audit trails, aligning with GFSI, consumer expectations, and regulatory measures.

4 Discussion Topics on Blockchain-Driven Food Provenance and Operations

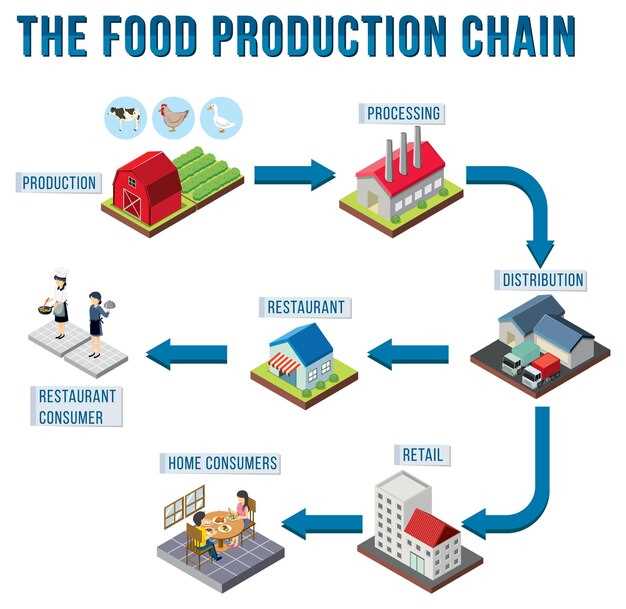

Topic 1: Construct four levels of provenance using distributed ledger technology to capture origin, processing, packaging, and distribution milestones; this approach yields verified histories and supports certificate issuance at each stage. Creators and retailers gain trust as Google-style queries surface trusted data; previously confirmed records provide bank-grade integrity across chains, enabling seamless document generation and automatic checks for required compliance. Years of practice show relevance across markets, and authors and creators must align on data standards to keep future benefits consistent.

Topic 2: Automate document flows to enable rapid, verified integrity checks across Lego-like blocks of activity from farms to retailers; this reduces back-and-forth, accelerates QA, and cuts error rates. Certificate issuance can trigger automatic checks when data meets defined standards. Cross-functional teams can tag changes with author and creator IDs, while Google-style indexing supports quick traceability.

Topic 3: Evaluate effectiveness by tracking time-to-verify, error rates, and cycle time across chains of custody spanning producers, packers, shippers, and retailers. Over years, this data-driven approach yields consistent reductions in waste and recalls. Intended metrics include cost per unit, return rates, and customer trust. Difficult contexts, such as diverse regulatory regimes, demand interoperable records and standardized certificates.

Topic 4: Governance design engages authors and creators alongside retailers, auditors, and regulators; set identical access levels, responsibilities, and data-retention policies to keep the same standards across jurisdictions. Bank-grade controls plus a conceptual risk framework ensure compliance across markets. Future work should align with industry groups and standards bodies; previously established norms provide relevance for implementation. Automated auditing routines and modular, Lego-style workflows support compliance and scalability, while open channels support fast adaptation.

End-to-End Traceability with Blockchain and IoT

Recommendation: Implement a sensor-linked, batch-level logging scheme using a trusted ledger to capture every event from origin to retailer, ensuring data cannot be altered without detection and maintaining calibration records for safety-related steps.
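One common way to make alteration detectable is to chain each ledger entry to the hash of the previous one. The sketch below is a minimal, single-process illustration of that idea, not a distributed ledger; the field names (`batch`, `step`, `temp_c`) and the helper functions are assumptions made for the example.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash used before the first entry


def entry_hash(prev_hash: str, event: dict) -> str:
    """Hash the previous entry's hash together with a canonical event encoding."""
    payload = json.dumps(event, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_event(ledger: list, event: dict) -> None:
    """Append an event, linking it to the hash of the previous entry."""
    prev_hash = ledger[-1]["hash"] if ledger else GENESIS
    ledger.append({"event": event, "prev_hash": prev_hash,
                   "hash": entry_hash(prev_hash, event)})


def verify_ledger(ledger: list) -> bool:
    """Recompute every link; an edited event or broken link returns False."""
    prev_hash = GENESIS
    for entry in ledger:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != entry_hash(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True


ledger = []
append_event(ledger, {"batch": "B-001", "step": "harvest", "temp_c": 4.1})
append_event(ledger, {"batch": "B-001", "step": "packing", "temp_c": 3.8})
intact = verify_ledger(ledger)

# Simulate someone editing an already-recorded reading.
ledger[0]["event"]["temp_c"] = 2.0
still_valid_after_edit = verify_ledger(ledger)
```

The chain only detects edits; preventing a party from rewriting the whole chain is what replication across independent participants is for.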

Begin with a minimum viable architecture: attach an RFID tag or QR code to each pallet, deploy temperature and humidity probes, use GPS to track movement, and push data to edge gateways that perform initial checks before compiling validated readings into ledger entries.
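The initial checks at an edge gateway could look roughly like the sketch below. The `Reading` fields, the plausibility ranges, and the five-second clock-skew allowance are illustrative assumptions, not values taken from any specific deployment.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    pallet_id: str       # from the RFID tag or QR code
    temp_c: float
    humidity_pct: float
    lat: float
    lon: float
    timestamp: float     # seconds since epoch, assumed already clock-synced


def gateway_check(r: Reading, now: float, max_skew_s: float = 5.0) -> list:
    """Return a list of problems; an empty list means the reading may be compiled."""
    problems = []
    if not r.pallet_id:
        problems.append("missing pallet id")
    if not -40.0 <= r.temp_c <= 60.0:          # assumed probe range
        problems.append("temperature outside sensor range")
    if not 0.0 <= r.humidity_pct <= 100.0:
        problems.append("humidity outside 0-100%")
    if not (-90.0 <= r.lat <= 90.0 and -180.0 <= r.lon <= 180.0):
        problems.append("invalid GPS coordinates")
    if r.timestamp > now + max_skew_s:
        problems.append("timestamp in the future")
    return problems


good = Reading("PAL-1", 4.0, 55.0, 48.85, 2.35, 1000.0)
bad = Reading("", 75.0, 120.0, 95.0, 2.35, 2000.0)
good_problems = gateway_check(good, now=1000.0)
bad_problems = gateway_check(bad, now=1000.0)
```

Rejected readings would normally be kept and flagged rather than silently dropped, so that device faults remain visible.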

Results from pilot programs show that linking sensor data with certificate metadata reduces misrepresentation risk, improves recall readiness, and elevates customer trust. In COVID-19-era analyses, immediate visibility lowered lost-stock incidents by 20–35% on tested routes.

The degree of improvement depends on data quality; key factors include device calibration, time synchronization, and data compilation frequency. The reported results rely on append operations at the gateway consistently completing within 3–5 seconds, with tamper-evident seals for packaging events.

In practice, never rely on a single source; no single supplier or facility should host all records. Create a distributed set of collectors and verifiers that enables safety-related checks on temperature excursions, humidity, and structural integrity. This collection supports market-facing reporting, allowing customers to view lineage, risk flags, and compliance status.

Experience from cases where COVID-19 disrupted flows shows that eliminating data gaps and mislabeling saves time during crises. A well-structured linkage system enables quick investigation into why a batch failed, facilitating targeted corrections rather than broad recalls.

Examples from tian-region networks show measurable benefits: 40–50% reduction in mislabeling, faster response times, and clearer experience for retailers, brokers, and consumers.

Data flow steps: capture > verify > compile > publish; each step adds a degree of assurance. If collected data lacks a verifiable origin, the risk of misrepresentation increases; designers must therefore embed digital signatures and non-repudiation into event records. In practice, creators of packaging labels must sign certificates to prevent spoofing.
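As a rough illustration of signing event records, the sketch below authenticates each event with an HMAC from Python's standard library. This is a stand-in: HMAC relies on a shared secret, so it proves integrity and origin only among key holders and does not provide true non-repudiation. A real deployment would more likely use asymmetric signatures, where only the signer holds the private key. The key and event fields are hypothetical.

```python
import hashlib
import hmac
import json


def canonical_bytes(event: dict) -> bytes:
    """Encode the event deterministically so signer and verifier hash the same bytes."""
    return json.dumps(event, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_event(event: dict, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag over the canonical event encoding."""
    return hmac.new(key, canonical_bytes(event), hashlib.sha256).hexdigest()


def verify_event(event: dict, tag: str, key: bytes) -> bool:
    """Constant-time comparison of the expected and supplied tags."""
    return hmac.compare_digest(sign_event(event, key), tag)


key = b"demo-shared-secret"  # hypothetical key, for illustration only
label_event = {"batch": "B-001", "step": "label", "certificate": "ORG-2024-17"}
tag = sign_event(label_event, key)

genuine = verify_event(label_event, tag, key)
spoofed = verify_event({**label_event, "certificate": "ORG-9999-99"}, tag, key)
```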

Pilot metrics include 28–44% faster response times, 15–30% lower loss due to damage, and higher confidence among customers.

This approach helps reshape risk management, eliminate data silos, and enable continuous improvement across market segments.

Automating Recall Procedures via Smart Contracts

Implement automatic recall triggers via smart contracts (self-enforcing agreements) that link batch IDs to current-state events and transport updates; the contracts enforce hold commands across partner networks immediately.

Fast recall orchestration reduces the risk of illnesses escalating.

In essence, a centralized yet interoperable model accelerates containment, reducing the losses that are most pronounced when illnesses spread and a product touches multiple nodes.

Assessed results from multiple pilots confirm empirical gains: faster isolation, transparent sources, and reduced losses, while maintaining data integrity.

Using nonce values prevents replay and supports auditability; data streams from point of origin to distribution nodes flow with minimal friction.
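A minimal sketch of nonce-based replay protection follows. It assumes each sender attaches a fresh random nonce to every message and that the receiver remembers which nonces it has seen; the `ReplayGuard` class and sender IDs are hypothetical, and a production system would also expire old nonces.

```python
import secrets


class ReplayGuard:
    """Rejects any (sender, nonce) pair that has already been accepted."""

    def __init__(self):
        self._seen = set()

    def accept(self, sender_id: str, nonce: str) -> bool:
        key = (sender_id, nonce)
        if key in self._seen:
            return False  # replayed message
        self._seen.add(key)
        return True


guard = ReplayGuard()
nonce = secrets.token_hex(16)  # 128-bit random value, unique per message

first = guard.accept("gateway-7", nonce)          # new message
replayed = guard.accept("gateway-7", nonce)       # same message sent again
other_sender = guard.accept("gateway-8", nonce)   # nonces are scoped per sender
```

Recording the accepted nonce next to the event also gives auditors a simple way to confirm that each state change was processed once.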

Data feeds from ERP, quality labs, and transport sensors drive decision points; strategies include recall triggers, hold notices, and supplier notifications.

Because it spans suppliers, processors, and retailers, this approach also yields current-state visibility and dynamic risk scoring.

Evaluation rests on characteristics such as traceability, response time, and containment accuracy. Assessed results, the value currently delivered, and empirical findings guide ongoing tuning of strategies.

| Step | Data Source | Trigger | Action | KPI |
|---|---|---|---|---|
| Ingestion | ERP, LIMS, WMS | state change | store event, compute nonce | latency, accuracy |
| Evaluation | sensor streams | illness signal | flag batch, notify parties | recall speed |
| Execution | contract ledger | assessed risk | issue hold, alert sources | reduction in lost items |
| Post-Recall | auditing logs | completion | document traceability | compliance rate |
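The four steps in the table can be sketched as a small in-memory simulation. This is plain Python standing in for contract logic, not code for any particular smart-contract platform; the `RecallContract` class, the 0.7 risk threshold, and the partner names are assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class RecallContract:
    risk_threshold: float = 0.7                      # hypothetical cut-off for a hold
    holders: dict = field(default_factory=dict)      # batch_id -> partners holding it
    status: dict = field(default_factory=dict)       # batch_id -> "active" or "hold"
    notices: list = field(default_factory=list)      # (partner, batch_id, message)
    audit_log: list = field(default_factory=list)    # post-recall trace

    def ingest(self, batch_id: str, partner: str) -> None:
        """Ingestion: record which partner currently holds part of a batch."""
        self.holders.setdefault(batch_id, []).append(partner)
        self.status.setdefault(batch_id, "active")
        self.audit_log.append(("ingest", batch_id, partner))

    def evaluate(self, batch_id: str, risk_score: float) -> None:
        """Evaluation: trigger a hold when the assessed risk crosses the threshold."""
        self.audit_log.append(("evaluate", batch_id, risk_score))
        if risk_score >= self.risk_threshold and self.status.get(batch_id) == "active":
            self.execute_hold(batch_id)

    def execute_hold(self, batch_id: str) -> None:
        """Execution: mark the batch as held and notify every partner that has it."""
        self.status[batch_id] = "hold"
        for partner in self.holders[batch_id]:
            self.notices.append((partner, batch_id, "HOLD"))
        self.audit_log.append(("hold", batch_id, len(self.holders[batch_id])))


contract = RecallContract()
contract.ingest("B-001", "processor-A")
contract.ingest("B-001", "retailer-B")
contract.ingest("B-002", "retailer-B")
contract.evaluate("B-001", 0.9)   # illness signal with high assessed risk
contract.evaluate("B-002", 0.2)   # low risk, no action
```

Only partners that actually hold the flagged batch are notified, which is what makes a targeted recall possible.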

Provenance Data Standards for Farm-to-Table Transparency

Adopt cross-domain provenance data standards that align source attribution, event encoding, and auditable records across all stages, supported by a published policy offering clarity for participants.

Define a canonical language for event encoding to enable databases and a website to interoperate, with clearly documented schemas and a reference implementation suite.

Since data standards emerged, notable cases have demonstrated the value of interoperable publishing of provenance events across farm, processing, and retail nodes. Methodological guidance from Bouzdine-Chameeva and Treiblmaier informs practical rules for publishing provenance data, retention policy, and access control, enabling risk reduction and accountability.

Policy should specify a canonical language for event encoding, provide reference implementations in multiple languages, publish these artifacts on a central website, and define publishing mechanisms to minimize interpretation gaps and data misalignment.
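To show what a canonical event encoding can buy, the sketch below normalizes timestamps to UTC and serializes events with sorted keys, so that two systems describing the same event produce byte-identical output. The required fields are a hypothetical schema invented for this example, not part of any published standard.

```python
import json
from datetime import datetime, timedelta, timezone

# Hypothetical minimal schema for a provenance event.
REQUIRED_FIELDS = ("event_type", "batch_id", "location", "occurred_at")


def encode_event(event: dict) -> bytes:
    """Validate required fields, normalize the timestamp to UTC, emit canonical JSON."""
    missing = [name for name in REQUIRED_FIELDS if name not in event]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    occurred = event["occurred_at"]
    if occurred.tzinfo is None:
        raise ValueError("occurred_at must be timezone-aware")
    normalized = dict(event)
    normalized["occurred_at"] = occurred.astimezone(timezone.utc).isoformat()
    return json.dumps(normalized, sort_keys=True, separators=(",", ":"),
                      ensure_ascii=False).encode("utf-8")


# The same event as recorded by two systems: different key order and time zone.
from_farm = {
    "event_type": "shipping", "batch_id": "B-001", "location": "DC-7",
    "occurred_at": datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc),
}
from_retail = {
    "occurred_at": datetime(2024, 1, 1, 13, 0, tzinfo=timezone(timedelta(hours=1))),
    "location": "DC-7", "batch_id": "B-001", "event_type": "shipping",
}
same_bytes = encode_event(from_farm) == encode_event(from_retail)
```

Byte-identical encodings matter because hashes and signatures computed by different partners only agree when the underlying bytes do.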

Finally, build intelligence-driven verification, error reduction, and robotic automation at origin points to strengthen source validation and tamper resistance while supporting audit trails.

Real-Time Cold-Chain Monitoring on a Shared Ledger

Deploy IoT sensors across every leg and feed readings into a shared ledger with automatically generated proofs; configure seamless ingestion, time-stamped events, and threshold alerts to respond within minutes.

This setup addresses a core challenge: data integrity across multiple players. Use a permissioned mechanism with role-based access, cryptographic signatures, and consensus checks to curb tampering and reduce vulnerability. Trusted participants automatically sign heat- or cold-stress alerts, creating an auditable trail that discourages fraud and preserves reputation.

Estimated improvements include 15–25% waste reduction from temperature excursions, 30–40% faster recalls, and better outcomes for customers through verifiable provenance. Advancements in sensor accuracy, data normalization, and cross-partner interoperability reduce complexity as data flows from source to end user with minimal latency, enabling seamless decision-making. Clear dashboards translate metrics into actionable insights.

Imagine a scenario where a single excursion triggers automatic hold-and-inspect workflows: if a sensor reports out-of-range conditions, shipments are quarantined and routing is adjusted, downstream partners receive trusted alerts, and tamper-resistant logs provide proof that previously hidden vulnerabilities were addressed and outcomes confirmed.
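The excursion scenario above can be sketched as a simple rule. The 2–8 °C range, the action names, and the reading format are assumptions for illustration; real thresholds depend on the product and applicable regulations.

```python
def evaluate_shipment(readings: list, low_c: float = 2.0, high_c: float = 8.0) -> dict:
    """Quarantine the shipment as soon as any reading falls outside the safe range."""
    excursions = [r for r in readings if not low_c <= r["temp_c"] <= high_c]
    if not excursions:
        return {"status": "in_transit", "actions": [], "evidence": None}
    return {
        "status": "quarantined",
        "actions": ["hold_and_inspect", "adjust_routing", "alert_downstream"],
        "evidence": excursions[0],  # first out-of-range reading, kept as proof
    }


normal_trip = [{"t": 0, "temp_c": 4.2}, {"t": 60, "temp_c": 5.1}]
warm_trip = [{"t": 0, "temp_c": 4.2}, {"t": 60, "temp_c": 9.4}, {"t": 120, "temp_c": 6.0}]

ok_result = evaluate_shipment(normal_trip)
held_result = evaluate_shipment(warm_trip)
```

A stricter variant could require several consecutive out-of-range readings before acting, trading faster response for fewer false alarms from sensor noise.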

To begin, run a controlled pilot in select corridors with 3–5 partners; define a data schema and a privacy-first sharing rule; pick a trusted provider with a clear security posture; monitor signaled events and iterate on data quality. Focus on reducing vulnerabilities such as sensor calibration drift, device outages, and manual data entry by establishing rapid incident response and continuous improvement. This shifts attention from traditional checks to the issues that once caused delays, and the benefits are measurable.

Cross-Border Interoperability Between Suppliers and Retailers

Adopt cross-border data exchange with blockchain-gs1 to enable real-time visibility across distributors and retailers. This shift yields extensive transparency, reduces fragmented information silos, and fosters coherent collaboration among the parties involved, with real advantages in speed and accuracy.

Defining common data elements using GS1 standards aligns identifiers, lot numbers, expiry dates, and location events across borders. Pair data with event timestamps to support accurate recalls and fast store-level responses. Efficient data processing through streaming validation reduces latency.
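One concrete piece of streaming validation is checking GS1 identifiers before they enter the shared record. The sketch below applies the standard GS1 check-digit rule for GTINs: weight the digits 3, 1, 3, ... starting from the rightmost digit of the body, then take the amount needed to reach the next multiple of ten. The function names are illustrative.

```python
def gtin_check_digit(body: str) -> int:
    """Compute the GS1 check digit for a GTIN body (all digits except the last)."""
    total = 0
    for position, char in enumerate(reversed(body)):
        weight = 3 if position % 2 == 0 else 1  # rightmost body digit gets weight 3
        total += int(char) * weight
    return (10 - total % 10) % 10


def gtin_is_valid(gtin: str) -> bool:
    """Accept GTIN-8, GTIN-12, GTIN-13, and GTIN-14 with a correct check digit."""
    if not (gtin.isascii() and gtin.isdigit()) or len(gtin) not in (8, 12, 13, 14):
        return False
    return gtin_check_digit(gtin[:-1]) == int(gtin[-1])


valid = gtin_is_valid("4006381333931")     # a valid EAN-13 / GTIN-13
mistyped = gtin_is_valid("4006381333932")  # last digit altered
malformed = gtin_is_valid("40063813A3931")
```

Rejecting malformed identifiers at ingestion keeps keying errors from propagating into recall and store-level lookups.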

Onboard distributors, retailers, carriers, and regulators as participants with role-based access to information, enabling trusted sharing without exposing sensitive details.
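Role-based access can be pictured as a per-role projection of each record. The roles and field names below are hypothetical; the point is only that every participant sees the subset of fields its role permits, and unknown roles see nothing.

```python
# Hypothetical mapping from role to the record fields that role may read.
ROLE_FIELDS = {
    "retailer": {"gtin", "lot", "expiry", "status"},
    "carrier": {"lot", "location", "status"},
    "regulator": {"gtin", "lot", "expiry", "location", "status", "supplier_id"},
}


def view_for(role: str, record: dict) -> dict:
    """Return only the fields the given role is allowed to see."""
    allowed = ROLE_FIELDS.get(role, set())
    return {name: value for name, value in record.items() if name in allowed}


record = {
    "gtin": "4006381333931", "lot": "L-2291", "expiry": "2025-03-01",
    "location": "Rotterdam", "status": "in_transit",
    "supplier_id": "SUP-88", "purchase_price": 1.42,
}

carrier_view = view_for("carrier", record)
regulator_view = view_for("regulator", record)
unknown_view = view_for("visitor", record)
```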

The absence of interoperable data is addressed by establishing a shared data layer, which enables coherent queries, automated exception handling, and more reliable performance across markets. A standards-based approach keeps this scalable.

Safeguard sensitive details through permissioned access, encryption, and immutable logs; evidence from pilots shows improved incident response, traceability, and accountability. Insight from field tests helps tailor access models and data views to regional needs.

The emergence of cross-border standards reduces manual checks and accelerates onboarding of new partners. Implement this framework using a three-phase path: governance set-up, a pilot with selected partners, and scale-up. Monitor key indicators such as cycle time, error rate, and cost per item to verify efficiency gains and justify broader usage.
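The three indicators named above can be computed from basic shipment records, as in the sketch below. The record fields and the sample figures are invented purely to demonstrate the arithmetic and are not results from any pilot.

```python
from statistics import mean


def corridor_kpis(shipments: list) -> dict:
    """Compute average cycle time, error rate per item, and cost per item."""
    total_items = sum(s["items"] for s in shipments)
    return {
        "avg_cycle_time_h": mean(s["delivered_h"] - s["dispatched_h"] for s in shipments),
        "error_rate": sum(s["errors"] for s in shipments) / total_items,
        "cost_per_item": sum(s["cost"] for s in shipments) / total_items,
    }


# Illustrative sample data only.
sample = [
    {"dispatched_h": 0, "delivered_h": 10, "items": 100, "errors": 2, "cost": 50.0},
    {"dispatched_h": 5, "delivered_h": 25, "items": 100, "errors": 0, "cost": 150.0},
]
kpis = corridor_kpis(sample)
```

Comparing these values between the pilot phase and the scale-up phase gives a like-for-like basis for judging whether efficiency gains hold.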

Evidence-based recommendations for policy alignment: adopt privacy-preserving data sharing, ensure consumer-facing safeguards, and publish standardized KPIs. Sustainable gains require governance that aligns incentives. Operate sustainably across corridors with innovative, interoperable systems that address long-term needs.

Each item movement is stored as a chain of events in blockchain-gs1, allowing store managers to verify product origin and status at any stage.

Data Privacy, Compliance, and Auditability in Blockchain Food Systems

Recommendation: Implement privacy-preserving data sharing with consent-driven access, off-ledger storage for sensitive records, and immutable audit trails on distributed ledger; these measures allow verification while keeping data protected.

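- Privacy architecture: adopt zero-knowledge proofs to verify attributes without exposing sensitive information; assign pseudonymous persona names; minimize the data collected; capture consent in tamper-evident logs; provide dashboards with clear visuals showing permissions, status, and related activity across days and contexts. This approach raises trust and protects consumers; research suggests that risk falls when safety-related data is protected during production and distribution.

- Compliance mapping: comply with GDPR, CCPA, and sector-specific guidelines; define clear data-subject rights workflows; implement automated policy checks; maintain evidence records that demonstrate compliance to regulators. According to Karen from compliance, this is best practice for cross-border data flows. Current practice shows smooth audits within days of continuous monitoring.

- Auditability and verification: store tamper-evident, immutable logs; generate automated reports for internal teams and external auditors; provide a consumer-facing transparency portal that reveals verified attributes without exposing details. Conversely, failing to segregate data raises risk, so emphasize secure interfaces and controlled data sharing. Platform operations teams access the information they need through role-based views, and human reviewers examine flagged anomalies.

- People, process, and risk management: assign data stewards across departments; run privacy, security, and compliance training; turn changes in data handling into measurable, auditable steps. Ongoing research indicates that outcomes are best when humans are involved at decision points. Risk review cycles currently run in parallel with production checks. Karen's case highlights the importance of routine audits and clear escalation paths.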
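The off-ledger storage pattern from the recommendation above can be sketched with a salted hash commitment: the sensitive record stays in a private store, and only a digest goes on the ledger. The store, ledger, and record names here are in-memory stand-ins invented for the example. This is a simple commitment scheme, not a zero-knowledge proof.

```python
import hashlib
import secrets

off_ledger_store = {}   # private storage: record_id -> (salt, payload)
public_ledger = []      # shared ledger: holds commitments only


def store_private(record_id: str, payload: bytes) -> str:
    """Keep the payload off-ledger and publish a salted SHA-256 commitment."""
    salt = secrets.token_bytes(16)  # random salt hinders guessing low-entropy payloads
    off_ledger_store[record_id] = (salt, payload)
    commitment = hashlib.sha256(salt + payload).hexdigest()
    public_ledger.append({"record_id": record_id, "commitment": commitment})
    return commitment


def audit(record_id: str) -> bool:
    """Check that the private record still matches its on-ledger commitment."""
    salt, payload = off_ledger_store[record_id]
    expected = next(e["commitment"] for e in public_ledger if e["record_id"] == record_id)
    return hashlib.sha256(salt + payload).hexdigest() == expected


store_private("lab-42", b"supplier=SUP-88;result=negative")
passes_audit = audit("lab-42")

# Simulate a later, unauthorized change to the private record.
salt, _ = off_ledger_store["lab-42"]
off_ledger_store["lab-42"] = (salt, b"supplier=SUP-88;result=positive")
passes_after_change = audit("lab-42")
```

An auditor granted access to the private record can verify it against the ledger, while other participants see only a digest that reveals nothing readable.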

In an interview, Karen notes that frontline staff respond positively when privacy controls are visible in day-to-day operations.
