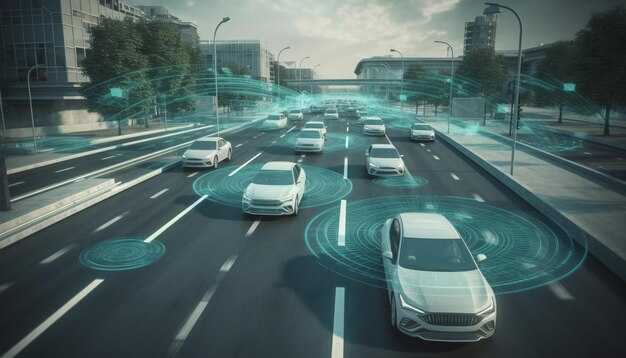

Deploy an integrated sensor-fusion stack that pairs Autoliv radars and occupant sensors with Volvo vehicle interfaces and NVIDIA Drive compute to enable real-time perception and materially reduce driver interventions. Set clear KPIs: target a 30–50% drop in manual takeovers in urban zones, a roughly 25% cut in false positives, and end-to-end latency below 80 ms. Use today's production connectors and standardized telemetry so teams can compare performance across hardware revisions and software builds.
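The KPI targets above can be expressed as an automated build gate. The following is a minimal sketch, assuming per-build aggregates are normalized per 1,000 km of comparable urban driving. The `BuildMetrics` fields and the use of p95 as the latency statistic are assumptions and are not part of the original plan.

```python
from dataclasses import dataclass


@dataclass
class BuildMetrics:
    """Aggregates for one software build over comparable urban driving (hypothetical schema)."""
    takeovers_per_1000_km: float
    false_positives_per_1000_km: float
    latency_p95_ms: float  # assumption: p95 end-to-end latency is the gated statistic


def kpi_report(baseline: BuildMetrics, candidate: BuildMetrics) -> dict:
    """Compare a candidate build with the baseline against the stated KPI targets."""
    takeover_drop = 1.0 - candidate.takeovers_per_1000_km / baseline.takeovers_per_1000_km
    fp_drop = 1.0 - candidate.false_positives_per_1000_km / baseline.false_positives_per_1000_km
    return {
        "takeover_drop": takeover_drop,
        "takeover_ok": takeover_drop >= 0.30,  # lower bound of the 30-50% target
        "fp_drop": fp_drop,
        "fp_ok": fp_drop >= 0.25,              # "roughly 25%" treated as a hard floor
        "latency_ok": candidate.latency_p95_ms < 80.0,
    }


baseline = BuildMetrics(10.0, 4.0, 95.0)
candidate = BuildMetrics(6.0, 3.0, 72.0)
print(kpi_report(baseline, candidate))
```

Treating the lower end of each range as the pass threshold keeps the gate conservative. A team could instead report progress toward the 50% upper target separately.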

Collect at least 2,000 hours of labeled driving per market. Prioritize scenarios such as intersection merges, pedestrian crossings and speeding zones, and maintain dense annotation for lane and object tracks. Store backward replays for edge-case analysis, aggregate metrics daily, and produce actionable dashboards that show which incidents drive interventions. Weight each track by exposure and severity so engineers can see how much data is needed to reproduce failures and validate fixes.
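One way to weight tracks by exposure and severity is to normalize interventions by exposure distance, then scale the result by a severity factor. This is a sketch under assumptions: the severity scale, the weights in `SEVERITY_WEIGHT` and the `Track` fields are all hypothetical, because the text does not define them.

```python
from dataclasses import dataclass

# Hypothetical severity scale and weights; the text does not define either.
SEVERITY_WEIGHT = {"low": 1.0, "medium": 3.0, "high": 10.0}


@dataclass
class Track:
    track_id: str
    exposure_km: float  # distance driven under the conditions this track covers
    interventions: int  # driver takeovers attributed to this track
    severity: str       # key into SEVERITY_WEIGHT


def weighted_score(track: Track) -> float:
    """Interventions per 1,000 km of exposure, scaled by the severity weight."""
    if track.exposure_km <= 0:
        raise ValueError(f"{track.track_id}: exposure must be positive")
    rate = track.interventions / track.exposure_km * 1000.0
    return rate * SEVERITY_WEIGHT[track.severity]


def rank_tracks(tracks: list[Track]) -> list[str]:
    """Track IDs ordered from highest to lowest weighted score."""
    return [t.track_id for t in sorted(tracks, key=weighted_score, reverse=True)]


print(rank_tracks([
    Track("merge-A", 100.0, 2, "low"),
    Track("crossing-B", 1000.0, 3, "high"),
    Track("speeding-C", 500.0, 1, "medium"),
]))
```

Normalizing by exposure keeps heavily driven routes from dominating the ranking just because they log more kilometers.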

Prioritize safety validation across simulation, closed-course drive tests and limited public pilots. Instrument real-time monitoring to flag speeding events and geofenced zones, correlate tracking telemetry with customer feedback and sales uplift, and report the aggregate number of near-miss events per 10,000 km. Share concise reports with product, regulatory and sales teams so they know which changes reduce risk and increase adoption. Iterate on nightly tracks that reproduce high-severity incidents, and filter out remaining noise before fleet-wide rollout.

Project scope, responsibilities and delivery milestones

Begin with a focused 12-month pilot: deliver Level 3 urban/highway functionality to 150 cars, target perception latency ≤10 ms and detection range ≥150 m, and demonstrate a 20% improvement in the occupant safety metric versus the baseline fleet by the end of the pilot.

Overview: define what success looks like across three pillars (safety, performance and production readiness). Map functionality to measurable KPIs for both brands (Autoliv-integrated safety hardware and Volvo Cars vehicle platforms), using the Zenuity stack and NVIDIA compute.

- Scope (what we will deliver):

  - Perception suite (lidar, radar, camera fusion) with 150 m range and a validated false-positive rate ≤0.1% on target classes.

  - Real-time planning and control stack with 10 ms decision latency and 99.9% availability within the operating conditions defined in the SOTIF annex.

  - Over-the-air update pipeline, data lake, and actionable dashboards that deliver curated incident reports and metrics within 48 hours of new data being collected.

- Data targets:

  - Collect 1.5M km of real-world driving (60% urban, 40% highway) and generate 50M simulation-equivalent km for validation.

  - Stream prioritized events in real time for scenario replay (target 2,000 high-severity events/month).

Responsibilities (clear owner per deliverable):

- Autoliv – lead vehicle occupant safety integration, sensor hardware validation, and production-grade fail-safe actuation; deliver hardware specs, certification report and MIL-STD shock/vibration tests by M6.

- Volvo Cars – vehicle integration, fleet logistics, safety case assembly and regulatory submissions; provide 150 test cars, chassis interfaces and in-vehicle telemetry by M1.

- NVIDIA – provide DRIVE compute platform, SDK support, optimization for energy and latency, plus reference neural network models and toolchain; deliver optimized model runtimes and a dev kit by M3.

- Zenuity – supply core autonomy software modules, perception training pipelines, and scenario-based validation suites; own the model retraining cadence and release candidate builds.

- Program governance – assign one program manager to coordinate sprints, integrate CI results across teams, and host weekly technical reviews to resolve cross-brand blockers.

Milestones (concrete delivery dates and acceptance criteria):

- M0–M1: hardware delivery and vehicle fitment complete; baseline telemetry enabled on all cars; acceptance: all 150 cars reporting heartbeats, with no packet-loss gap longer than 1 minute.

- M2–M3: first integrated stack on 30 pilot cars; closed-loop simulation pass (10M km equiv.); acceptance: perception precision ≥92% on target classes and control loop <10 ms.

- M4–M6: scale to 75 cars, execute 500k km real-world drive; safety case draft with preliminary hazard analysis; acceptance: scenario coverage ≥70% of defined use cases and energy budget ≤70% of thermal headroom.

- M7–M9: full-stack optimization, retrain deep models with collected data, run 20M simulation km for edge cases; acceptance: repeated regression tests pass 30 consecutive nightly CI cycles.

- M10–M12: fleet at 150 cars, validate production release candidate, submit regulatory dossiers; acceptance: final safety metrics met (20% improved occupant safety score, 95% scenario coverage) and go/no-go for limited commercial rollout.
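The M0–M1 acceptance check can be automated from heartbeat logs. The following is a minimal sketch. It assumes each car emits timestamped heartbeat records, and it interprets "no packet-loss gap longer than 1 minute" as no gap between consecutive heartbeats longer than 60 s. The record format is hypothetical.

```python
from collections import defaultdict


def heartbeat_acceptance(events, expected_cars=150, max_gap_s=60.0):
    """Check that every expected car reports and no heartbeat gap exceeds max_gap_s.

    events: iterable of (car_id, timestamp_s) heartbeat records.
    Returns (passed, offending), where offending maps car_id -> longest gap in seconds.
    """
    times = defaultdict(list)
    for car_id, ts in events:
        times[car_id].append(ts)
    offending = {}
    for car_id, stamps in times.items():
        stamps.sort()
        # Longest interval between consecutive heartbeats for this car.
        longest = max((b - a for a, b in zip(stamps, stamps[1:])), default=0.0)
        if longest > max_gap_s:
            offending[car_id] = longest
    passed = len(times) == expected_cars and not offending
    return passed, offending
```

Gaps before a car's first heartbeat or after its last one are not counted. A stricter check would also compare each car's first and last heartbeat against the boundaries of the test window.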

Algorithms and validation cadence:

- Operate a weekly training pipeline for data curation, with deep model fine-tuning every two weeks and hotfix deployments within 72 hours for safety-critical regressions.

- Maintain a continuous validation grid: 50 automated tests per commit, 24/7 regression farms and a manual review queue for edge cases that takes ≤48 hours to triage.

Metrics you should track (most actionable KPIs):

- Perception: precision/recall per object class, mean detection range, and false-positive rate.

- Latency: perception-to-actuation pipeline ≤10 ms median, 99th percentile ≤25 ms.

- Reliability: uptime >99.9% and incident rate ≤0.02 per 10k km.

- Validation depth: simulation coverage vs real-world coverage ratio and number of unique edge cases discovered per 100k km.
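The latency KPI pairs a median with a tail bound, so compute both statistics from the same sample. This minimal sketch uses the nearest-rank definition of the 99th percentile, which is an assumption; interpolating definitions give slightly different values.

```python
import statistics


def latency_gate(samples_ms, median_limit_ms=10.0, p99_limit_ms=25.0):
    """Check perception-to-actuation latency against the median and p99 targets."""
    if not samples_ms:
        raise ValueError("no latency samples")
    ordered = sorted(samples_ms)
    median = statistics.median(ordered)
    # Nearest-rank p99: the ceil(0.99 * n)-th smallest sample, computed in integers.
    rank = (99 * len(ordered) + 99) // 100
    p99 = ordered[rank - 1]
    return {
        "median_ms": median,
        "p99_ms": p99,
        "passed": median <= median_limit_ms and p99 <= p99_limit_ms,
    }
```

Integer arithmetic for the rank avoids floating-point rounding at exact percentile boundaries.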

Expected achievements by project end:

- Model accuracy improved by 15–25%.
- Production-grade integration across both brands.
- A validated safety case that allows features to be offered in regulated pilots.

Assign clear owners, enforce the milestone calendar, and track weekly action items to keep delivery on schedule.

Assigned roles for Autoliv, Volvo Cars and NVIDIA in sensors, software stacks and vehicle integration

Assign Autoliv to own sensor hardware and data-collection pipelines, Volvo Cars to own vehicle integration and operator workflows, and NVIDIA to own perception/decision software stacks and high-performance compute. This split minimizes overlap and makes clear who trains models and who validates results.

Autoliv: lead sensor specification, manufacturing and on-vehicle calibration. Define sensor types (lidar, radar, stereo vision, ultrasonic) with concrete KPIs: lidar range ≥200 m at 10% reflectivity, radar false alarm rate ≤0.5/km, and camera dynamic range ≥140 dB. Autoliv must manage synchronized timestamping, microsecond-level clocking, and the lossless metadata collection that model training requires. Require sensor-in-the-loop testing across a variety of environments and zones (urban, highway, low-light), run weekly regression suites, and publish defect-cause reports to reduce repeated failures. Provide operators with a real-time sensor health view and with automated alerts when connectivity or calibration drift exceeds thresholds that affect perception speed or accuracy.

Volvo Cars: integrate sensors into vehicle electrical and mechanical systems, and own actuator interfaces, safety controllers and operator HMIs. Define an AEMP-compatible interface to share vehicle state and enable safe remote takeover. Specify end-to-end latency budgets: perception-to-actuation ≤100 ms for primary maneuvers, and a braking chain that meets ISO 26262 ASIL D. Volvo should implement geofenced operational zones, map management, and onboard data collection policies. It should also decide whether to stream raw data or edge-filtered telemetry based on connectivity costs. Ship vehicle test fleets with redundant power and logging capacity to support long-term collection for offline training and on-road testing of new software stacks.

NVIDIA: deliver the core software stack (sensor fusion, perception, prediction, planning, and control), optimized for Drive compute platforms. Provide reference neural-network architectures, training recipes, and validation datasets so partner companies can reproduce results and train on shared collections. Ensure the software supports distributed training and reports reproducible metrics for accuracy, latency, throughput and model size. It should also ship tooling that lets operators and engineers inspect model decision points and draw ML insights from them. Implement closed-loop testing harnesses that run NVIDIA stacks in hardware-in-the-loop, collect failure cases, and return prioritized tickets to Autoliv and Volvo Cars for targeted improvements.

Operational recommendations: establish a joint governance board to share test results, assign sprint owners per subsystem, and run monthly cross-company reviews focused on productivity metrics (test coverage, mean time to repair, model convergence speed). Use a common data schema for collection so teams can share labels, scenarios and edge cases without repeated reformatting. Define clear ownership for cause-analysis: sensor anomalies go to Autoliv, integration bugs to Volvo Cars, model regressions to NVIDIA. That separation shortens debug cycles and improves time-to-fix.
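The cause-analysis ownership rule maps directly onto a ticket router. This is a minimal sketch. The `Cause` categories mirror the three classes named above. Sending unclassified failures to the joint governance board is an assumption; the text does not specify it.

```python
from enum import Enum
from typing import Optional


class Cause(Enum):
    SENSOR_ANOMALY = "sensor_anomaly"
    INTEGRATION_BUG = "integration_bug"
    MODEL_REGRESSION = "model_regression"


# Ownership split stated in the operational recommendations.
OWNER = {
    Cause.SENSOR_ANOMALY: "Autoliv",
    Cause.INTEGRATION_BUG: "Volvo Cars",
    Cause.MODEL_REGRESSION: "NVIDIA",
}


def route_ticket(cause: Optional[Cause]) -> str:
    """Return the owner of root-cause analysis for a classified failure."""
    if cause is None:
        return "joint governance board"  # assumption: fallback owner for unclassified failures
    return OWNER[cause]
```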

| Company | Primary scope | Key deliverables | Performance KPIs |
|---|---|---|---|
| Autoliv | Sensors, calibration, data collection | Sensor modules, calibration tools, field collection rigs | Lidar range ≥200 m; timestamp jitter ≤1 µs; false alarm ≤0.5/km |
| Volvo Cars | Vehicle integration, operator interfaces, safety | AEMP integration, actuator interfaces, geofence management | Perception→actuation latency ≤100 ms; ASIL D compliance; zone-defined fail-safe |
| NVIDIA | Software stacks, training infra, runtime compute | Perception/planning stacks, training pipelines, validation suites | End-to-end throughput ≥30 fps; detection AP targets; model retrain cadence |

Measure integration success with three combined metrics:

- System-level false positive and false negative rates.
- Fleet mean distance between failures.
- The percentage of edge cases closed per quarter.

Shared connectivity and standardized collection accelerate training and make it clearer why certain scenarios cause failures. Final ownership should follow the allocation of resources: Autoliv validates sensor robustness, Volvo Cars validates vehicle safety, and NVIDIA validates model performance. Keep that split and you drive faster progress across the industry.

Sensor fusion architecture: selecting radar, lidar and camera mixes and redundancy targets

Deploy triple-modality redundancy across the forward driving corridor: two long-range radars (200–250 m), two forward-facing cameras with overlapping fields of view (120–200 m effective), and one long-range lidar (120–200 m); ensure every object in the stopping envelope is visible to at least two modalities.

Recommended mixes by use case:

- Highway (high-speed, autonomous highway pilot): 2 long-range narrow-azimuth radars (200–250 m), 1 long-range lidar, 4 forward/side high-resolution cameras, and 4 short-range radars for close-in detection. Redundancy target: 2 modalities cover the 0–250 m front corridor; triple redundancy for 0–80 m.

- Urban (complex intersections, pedestrians): 2 mid-range lidars (120 m) or 1 lidar plus additional short-range radars, 8 cameras for 360° visual coverage with 30–50% overlap per sector, and 6 short/mid-range radars. Redundancy target: triple modality for 0–60 m, dual modality to 120 m.

- Cost-optimized production (L3 features, limited ADAS): omit the long-range lidar and use 6–8 cameras and 6 radars, with more processing budget for radar-camera fusion. Redundancy target: dual modality across critical zones; add a single lidar for higher trims.

Sensor roles and physical placement:

- Long-range radars: narrow azimuth (10–25°) on the vehicle centerline to track the speed and range of distant vehicles; they handle initial threat cueing.

- Mid/short-range radars: wide azimuth (60–140°) in bumpers and corners for blind-spot and close obstruction tracking; place to give overlapping coverage in corners.

- Cameras: high dynamic range (120–200 m effective) for classification and lane/traffic sign vision; pair front cameras with 20–40% pixel overlap and staggered exposures for HDR.

- Lidar: use 120–200 m units for object shape and precise range/height; mount to minimize physical occlusion and heat flux.

Concrete performance and calibration targets:

- End-to-end perception latency: perception pipeline <50 ms; planning-ready tracks <100 ms.

- Time sync: timestamp alignment <5 ms across sensors.

- Spatial calibration: extrinsic error <5 cm and angular error <0.5° for camera-lidar fusion.

- Detection goal: reliably detect objects >0.3 m at 60 m in urban and >150 m on highways under nominal conditions (actual reliability depends on sensor mix and weather).

- Tracking: maintain continuous track identity through occlusion windows up to 2 s using fused points and vision features.
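Meeting the time-sync target starts with pairing frames across sensors on a common clock. This minimal sketch matches each camera frame to the nearest lidar sweep. It drops any pair whose offset exceeds the 5 ms alignment target. It assumes both streams are already stamped on a shared clock and sorted; the function name is illustrative.

```python
import bisect


def align_frames(camera_ts, lidar_ts, tolerance_s=0.005):
    """Pair each camera timestamp with the nearest lidar timestamp within tolerance.

    Both inputs are sorted lists of timestamps in seconds on a common clock.
    Returns (camera_ts, lidar_ts) pairs; camera frames without a match are dropped.
    """
    pairs = []
    for t in camera_ts:
        i = bisect.bisect_left(lidar_ts, t)
        # Only the sweeps immediately before and after t can be nearest.
        candidates = [lidar_ts[j] for j in (i - 1, i) if 0 <= j < len(lidar_ts)]
        if not candidates:
            continue
        nearest = min(candidates, key=lambda s: abs(s - t))
        if abs(nearest - t) <= tolerance_s:
            pairs.append((t, nearest))
    return pairs


# 60 fps camera against a 20 Hz lidar: only frames near a sweep survive.
print(align_frames([0.0, 0.0167, 0.0333, 0.05], [0.0, 0.05, 0.10]))
```

With a 60 fps camera and a 20 Hz lidar, most camera frames have no sweep within 5 ms. That is why fusion pipelines typically also apply ego-motion compensation rather than relying on timestamp proximity alone.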

Fusion architecture and data flow:

- Pre-fusion layer: synchronous stamping, ego-motion compensation, and raw data filtering (radar point clusters, lidar point clouds, camera rectified frames).

- Low-level fusion: project lidar points and radar clusters into camera image space for early association; apply depth-aided segmentation to reduce false positives.

- Tracker layer: multi-hypothesis Kalman/PMBM trackers that ingest modality-specific detections and output fused tracks with modality confidence scores.

- Decision layer: rule- and ML-based risk scoring that uses modality agreement as a primary safety weight; require two independent modalities to confirm high-risk targets within stopping distance before braking maneuvers escalate.
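The decision-layer rule requires two independent modalities to confirm a high-risk target inside the stopping distance. It can be sketched as follows. Stopping distance is modeled as reaction distance plus braking distance, v·t_r + v²/(2a). The 0.5 s reaction time and 6 m/s² deceleration are illustrative assumptions, not values from the text. Deferring escalation for targets outside the envelope is also an assumption of this sketch.

```python
def stopping_distance_m(speed_mps: float, reaction_s: float = 0.5, decel_mps2: float = 6.0) -> float:
    """Reaction distance v*t_r plus braking distance v^2 / (2a); defaults are illustrative."""
    return speed_mps * reaction_s + speed_mps ** 2 / (2.0 * decel_mps2)


def may_escalate_braking(track_range_m: float, confirming_modalities, speed_mps: float) -> bool:
    """Gate braking escalation for one fused high-risk track.

    confirming_modalities: modalities (e.g. "radar", "camera", "lidar") with a detection
    associated to the track in the current cycle. Inside the stopping envelope, at least
    two distinct modalities must agree; outside it, escalation is deferred.
    """
    if track_range_m > stopping_distance_m(speed_mps):
        return False
    return len(set(confirming_modalities)) >= 2


# At 20 m/s (72 km/h) the envelope is about 43.3 m with the default parameters.
print(may_escalate_braking(30.0, {"radar", "camera"}, 20.0))
```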

Redundancy targets expressed simply:

- Critical forward corridor (0–150 m): at least 2 independent modalities with independent failure modes.

- Close range (0–60 m): 3-modality coverage where pedestrian and cyclist interactions occur.

- Corner/side zones: dual-modality coverage; add a third sensor for high-risk vehicle variants or retrofit kits.
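The forward redundancy targets can be checked against a candidate sensor set at design time. This minimal sketch treats each sensor's rated range as coverage along the forward corridor, which ignores field of view, occlusion and weather. The sensor list is a hypothetical forward-corridor layout.

```python
# Hypothetical forward-corridor layout: (modality, rated range in meters).
FORWARD_SENSORS = [
    ("radar", 250.0), ("radar", 250.0),
    ("camera", 200.0), ("camera", 200.0),
    ("lidar", 200.0),
]

# Redundancy targets from the text: (start_m, end_m, required distinct modalities).
FORWARD_TARGETS = [(0.0, 150.0, 2), (0.0, 60.0, 3)]


def modalities_at(distance_m, sensors):
    """Distinct modalities whose rated range reaches distance_m."""
    return {modality for modality, rated in sensors if rated >= distance_m}


def redundancy_shortfalls(sensors, targets, step_m=1.0):
    """Return (distance_m, found, required) wherever a target zone is under-covered."""
    shortfalls = []
    for start, end, required in targets:
        steps = int(round((end - start) / step_m))
        for k in range(steps + 1):
            d = start + k * step_m
            found = len(modalities_at(d, sensors))
            if found < required:
                shortfalls.append((d, found, required))
    return shortfalls


no_lidar = [s for s in FORWARD_SENSORS if s[0] != "lidar"]
print(len(redundancy_shortfalls(no_lidar, FORWARD_TARGETS)))  # points missing triple coverage
```

Dropping the lidar leaves the 0–150 m dual-modality target intact but fails the 0–60 m triple-modality target at every sampled point. This is the trade-off the cost-optimized mix accepts.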

Data strategy, testing and validation:

- Collect hundreds of hours and hundreds of thousands of kilometers of labeled runs. Prioritize edge-case mining (near-misses, speeding events, occluded pedestrians) over archiving all raw frames to reduce storage costs.

- Run 1–5 million scenario simulations to augment real-world logs; validate sensor fusion against both Zenuity-style pipelines and corporate safety baselines.

- Use synchronized visualization dashboards for rapid triage and for sharing labeled incidents across teams; ensure labels include modality confidence and track-level provenance.

Cost and operational trade-offs:

- Lidar remains costly; deploy it where point accuracy adds unique value (urban curb detection, low-speed pedestrian protection). If the budget is constrained, increase radar density and camera overlap but accept reduced object-shape fidelity.

- Compute and bandwidth scale with camera resolution and lidar point density; budget processing headroom to avoid costly overflows during peak events (sudden bursts of data capture during incidents).

Safety and regression criteria:

- Design to detect and track objects that could lead to injuries in emergency braking scenarios; require independent confirmation from a secondary modality before suppressing braking commands.

- Maintain logs that let you share perception failure points with suppliers and regulators; most meaningful fixes will come from correlating simulation failures with evidence collected in the real world.

Follow these prescriptions and you'll deliver a fusion stack that balances vision, radar and lidar strengths and keeps costly sensors where they add unique value. It also provides measurable targets for calibration, latency and redundancy that support safe autonomous operation in production cars.

Data pipeline requirements: on-road data capture, annotation standards and shared dataset governance

Mandate synchronized multi-sensor capture with per-frame timestamp jitter ≤1 ms, GNSS+RTK position accuracy ≤10 cm, IMU ≥200 Hz, LiDAR at 10–20 Hz and cameras at 60 fps. Work with OEM fleets (for example, Volvo EX90 vehicles) to instrument vehicles and log raw streams plus calibrated extrinsics for each sensor, so that every event can be reconstructed deterministically.

Provide explicit capture parameters per site: minimum 1,000 drive hours or 50,000 km collected per geographic site, stratified to include at least 100k daytime intersections, 50k night intersections, 30k rain segments and 10k snow segments. Collecting to these targets reduces class imbalance and gives models enough rare-event examples such as speeding, emergency braking and complex merges.

Define annotation standards that require 3D bounding boxes with temporal object IDs, per-frame occlusion and truncation flags, behavior labels (e.g., pedestrian intent, lane-change start/finish), and sensor-projection metadata. Specify annotation accuracy targets: inter-annotator IoU ≥0.80 for 3D boxes, consensus agreement ≥95% on safety-critical labels, and label latency ≤72 hours for safety-relevant clips so training pipelines receive timely updates.
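The inter-annotator IoU ≥0.80 target requires a 3D IoU computation. This minimal sketch handles axis-aligned boxes only. Real 3D labels usually carry a heading (yaw) angle, which requires a rotated-polygon intersection in the ground plane, so treat this as a simplified check.

```python
def iou_3d_axis_aligned(a, b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, z_min, x_max, y_max, z_max).

    Simplification: yaw is ignored, so rotated boxes are not handled correctly.
    """
    overlap = 1.0
    for i in range(3):
        lo = max(a[i], b[i])
        hi = min(a[i + 3], b[i + 3])
        if hi <= lo:
            return 0.0  # no overlap along this axis
        overlap *= hi - lo
    vol_a = (a[3] - a[0]) * (a[4] - a[1]) * (a[5] - a[2])
    vol_b = (b[3] - b[0]) * (b[4] - b[1]) * (b[5] - b[2])
    return overlap / (vol_a + vol_b - overlap)


def annotators_agree(box_a, box_b, threshold=0.80):
    """True if two annotators' boxes for the same object meet the IoU target."""
    return iou_3d_axis_aligned(box_a, box_b) >= threshold


car = (0.0, 0.0, 0.0, 4.0, 2.0, 1.5)
print(iou_3d_axis_aligned(car, (1.0, 0.0, 0.0, 5.0, 2.0, 1.5)))  # 1 m longitudinal offset
```

A 1 m longitudinal offset on a 4 m car already drops IoU well below 0.80. That shows how strict the target is for large objects.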

Use a layered QA pipeline with three layers:

- Automated validators: range checks, timestamp continuity, and reprojection error thresholds ≤5 cm.
- Golden-set spot checks: sample 1% of frames per batch.
- Blind re-annotation on 2% of samples.

Capture annotation provenance (annotator ID, tool version, correction history) and expose it through metadata. Teams can then trace how each label was produced, link model behavior back to the labels it was trained on, and answer audit queries.
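The automated validators and spot-check sampling can be sketched as below. Thresholds follow the text where it gives them: 5 cm reprojection error, a 1% golden-set sample and a 2% blind re-annotation sample. The 250 m range bound, the 1.5× period slack and the hash-based sampling scheme are assumptions. Hashing salted frame IDs makes selection deterministic, so a rerun picks the same frames.

```python
import hashlib


def validate_frame(ranges_m, reproj_errors_m, max_range_m=250.0, max_reproj_m=0.05):
    """Range and reprojection checks for one annotated frame; returns failure reasons."""
    reasons = []
    if any(r < 0.0 or r > max_range_m for r in ranges_m):
        reasons.append("range_out_of_bounds")
    if any(e > max_reproj_m for e in reproj_errors_m):
        reasons.append("reprojection_error")
    return reasons


def timestamp_breaks(timestamps, period_s, slack=1.5):
    """Indices i where timestamps[i] does not advance, or advances by more than slack * period."""
    return [
        i for i in range(1, len(timestamps))
        if not 0.0 < timestamps[i] - timestamps[i - 1] <= slack * period_s
    ]


def sample_for_review(frame_ids, fraction, salt):
    """Deterministically select about `fraction` of frames by hashing salted IDs."""
    buckets = 10_000
    cutoff = int(fraction * buckets)
    selected = []
    for fid in frame_ids:
        digest = hashlib.sha256(f"{salt}:{fid}".encode()).digest()
        if int.from_bytes(digest[:8], "big") % buckets < cutoff:
            selected.append(fid)
    return selected


ids = [f"frame-{i}" for i in range(10_000)]
golden = sample_for_review(ids, 0.01, salt="golden")  # ~1% golden-set spot checks
blind = sample_for_review(ids, 0.02, salt="blind")    # ~2% blind re-annotation
```

Using different salts keeps the golden-set and blind samples independent of each other.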

Run edge preprocessing on-vehicle to reduce bandwidth and protect privacy: perform on-device anonymization (face and plate redaction), compute pre-filter scores for events of interest, and upload encrypted snippets for human review. When connectivity drops, buffer hashed manifests and only transmit signed payloads once connectivity and regulatory checks pass.
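The "buffer hashed manifests, transmit signed payloads" step can be illustrated with the Python standard library. This is a sketch under assumptions. HMAC-SHA256 with a shared key stands in for whatever signature scheme the fleet actually uses; an HSM-backed asymmetric signature would likely be the production choice. The manifest layout is hypothetical.

```python
import hashlib
import hmac
import json


def build_manifest(snippets: dict[str, bytes]) -> dict[str, str]:
    """Map each snippet name to the SHA-256 hex digest of its (already redacted) bytes."""
    return {name: hashlib.sha256(data).hexdigest() for name, data in sorted(snippets.items())}


def sign_manifest(manifest: dict[str, str], key: bytes) -> str:
    """HMAC-SHA256 over a canonical JSON encoding; a stand-in for an HSM-backed signature."""
    payload = json.dumps(manifest, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_manifest(manifest: dict[str, str], signature: str, key: bytes) -> bool:
    """Constant-time check that the manifest matches its signature."""
    return hmac.compare_digest(sign_manifest(manifest, key), signature)


# Offline: buffer the manifest and signature; upload only once connectivity returns.
manifest = build_manifest({"clip_000.bin": b"...", "clip_001.bin": b"..."})
signature = sign_manifest(manifest, key=b"demo-key-not-for-production")
```

Canonical JSON (sorted keys, fixed separators) ensures the vehicle and the backend hash exactly the same bytes.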

Align retention, sharing and governance with regulations such as GDPR and UNECE WP.29: store raw-identifiable streams for a defined retention window (e.g., 24 months) and retain de-identified training derivatives longer with documented legal basis. Provide per-record consent logs, geofence-based access controls, and jurisdictional policy flags so a dataset consumer or regulator can see which records are restricted.

Establish a shared dataset governance model: a cross-company steering group that includes safety engineers, privacy officers, customer representatives and external regulators; a dataset steward role that enforces schema changes; and versioned releases with semantic versioning for labels and splits. Publish dataset manifests, checksums and change logs to a secure marketplace API so partners can discover capabilities and download only approved bundles.

Measure operational KPIs: track collection throughput (GB/hour), labeling throughput (frames/hour/annotator), annotation error rate, and model improvement per release (mAP deltas, false positives per km). Tie these KPIs to deployment gates. Do not advance a model beyond closed-track validation until two conditions hold: the real-world false positive rate for safety-critical classes drops below target, and customer-facing metrics on the vehicle remain within SLA.

Make visualization and tooling available at stakeholders' fingertips: interactive replay with sensor fusion layers, label timelines, and automated root-cause markers to speed debugging. These tools shorten iteration cycles for AI models and provide the concrete evidence regulators and society expect for safe operation.

Govern shared datasets pragmatically: require license metadata for each release, tiered access for research and commercial use, cryptographic provenance for every file, and a dispute-resolution workflow so annotator disagreements have a clear answer. This approach keeps the marketplace auditable, protects customer privacy, and helps the entire industry take the next leap beyond siloed data efforts.

NVIDIA Drive deployment plan: compute sizing, software stack versioning and over‑the‑air update process

Allocate compute per vehicle and reserve headroom: L2+ = 100–200 TOPS, L3 = 200–400 TOPS, L4 = 600–1,200 TOPS, with a 30–50% safety margin for future model growth; prefer Drive Orin/Thor class modules and size aggregate TOPS to include perception, localization, planning and redundancy.

Budget compute by function: camera perception 15–30 TOPS per 1080p stream, lidar perception 80–200 TOPS depending on point density, radar signal processing 5–20 TOPS, localization/fusion 20–50 TOPS, motion planning 30–100 TOPS. Sum these into a per-vehicle aggregate, run each pipeline at target rates (perception 30–60 Hz, localization 20–60 Hz, planning 10–50 Hz) and verify end‑to‑end latency (95th percentile <100 ms for perception-to-actuation on L3, <30 ms for L2 emergency interventions) to achieve the very low response times needed for automotive safety.
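Summing the per-function budgets is simple arithmetic, but writing it down makes the headroom explicit. This minimal sketch uses the TOPS ranges quoted above. The camera count is a parameter you supply, one lidar pipeline is assumed, and the margin defaults to the low end of the 30–50% range.

```python
# Per-function TOPS ranges (low, high) as quoted above.
BUDGET_TOPS = {
    "camera_perception_per_stream": (15, 30),
    "lidar_perception": (80, 200),
    "radar_processing": (5, 20),
    "localization_fusion": (20, 50),
    "motion_planning": (30, 100),
}


def required_tops(camera_streams: int, margin: float = 0.30) -> tuple[float, float]:
    """Aggregate (low, high) TOPS for one vehicle, including the growth margin.

    Assumes one lidar perception pipeline and one instance of every other function;
    only camera perception scales with the number of 1080p streams.
    """
    low = high = 0.0
    for name, (lo, hi) in BUDGET_TOPS.items():
        count = camera_streams if name == "camera_perception_per_stream" else 1
        low += count * lo
        high += count * hi
    return low * (1.0 + margin), high * (1.0 + margin)


print(required_tops(4))        # 30% margin
print(required_tops(4, 0.50))  # 50% margin
```

For four camera streams, the raw sum is 195–490 TOPS. With a 30% margin that becomes 253.5–637 TOPS, which can be compared against the per-level tiers above.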

Use strict versioning: adopt MAJOR.MINOR.PATCH-hwSKU for the vehicle-level stack and version each module (perception, prediction, planning, control, security) independently. Map versions in a compatibility matrix that ties software MAJOR to ABI changes and MINOR to model updates; keep deterministic builds and signed SBOMs per image. Upon any software release, management tooling must generate a clear roll‑forward and roll‑back plan, including test vectors and pass/fail thresholds for each function.
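The MAJOR.MINOR.PATCH-hwSKU scheme can be enforced with a small parser and a compatibility rule. This is a sketch under assumptions. The SKU alphabet is a guess. The rule that an in-place OTA may only move forward within the same MAJOR (ABI) line and hardware SKU is one reading of the compatibility matrix described above.

```python
import re
from typing import NamedTuple

# Assumed SKU alphabet: letters, digits, underscore, dot and hyphen.
VERSION_RE = re.compile(r"^(\d+)\.(\d+)\.(\d+)-([A-Za-z0-9_.-]+)$")


class StackVersion(NamedTuple):
    major: int
    minor: int
    patch: int
    hw_sku: str


def parse_version(text: str) -> StackVersion:
    """Parse a MAJOR.MINOR.PATCH-hwSKU string, raising ValueError on malformed input."""
    match = VERSION_RE.match(text)
    if match is None:
        raise ValueError(f"not MAJOR.MINOR.PATCH-hwSKU: {text!r}")
    major, minor, patch, sku = match.groups()
    return StackVersion(int(major), int(minor), int(patch), sku)


def can_roll_forward(installed: str, candidate: str) -> bool:
    """True if candidate is a newer build on the same hardware SKU and ABI (MAJOR) line."""
    a, b = parse_version(installed), parse_version(candidate)
    if a.hw_sku != b.hw_sku or a.major != b.major:
        return False  # SKU or ABI change: needs a separately planned migration
    return (b.minor, b.patch) > (a.minor, a.patch)
```

Rollbacks are deliberately out of scope here. In the OTA design they are handled by switching back to the last known-good A/B partition, not by a version comparison.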

Design the OTA pipeline with safety and efficiency in mind: dual A/B partitions with atomic switch, signed images validated by HSM/secure boot, delta compression (zstd) for payloads, and differential model updates so you only transfer changed weights. Start rollouts as canaries (1% of fleet), measure telemetry for 48–72 hours, then ramp to 10% and 50% before full deployment. Define automatic rollback triggers (excess CPU, increased inference errors, missed heartbeats) that revert to the last known-good partition upon failure; tracking telemetry and error results in real time lets you contain regressions before they reach critical vehicles.
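The staged rollout and its rollback triggers can be modeled as a small decision function. This minimal sketch follows the stage fractions from the text (1%, 10%, 50%, then full fleet). The telemetry fields and numeric thresholds are hypothetical, because the text names the signals but not their limits. Applying the 48-hour soak to every stage, not only the canary, is also an assumption.

```python
from dataclasses import dataclass

STAGES = [0.01, 0.10, 0.50, 1.00]  # fleet fractions from the rollout plan above

# Hypothetical trigger thresholds; the text names the signals but not the limits.
MAX_CPU_DELTA = 0.10      # allowed CPU load increase vs. last known-good build
MAX_ERROR_DELTA = 0.05    # allowed increase in inference error rate
MIN_SOAK_HOURS = 48.0     # lower end of the 48-72 hour observation window


@dataclass
class CanaryTelemetry:
    cpu_load_delta: float
    inference_error_delta: float
    missed_heartbeats: int
    hours_observed: float


def next_action(stage_index: int, t: CanaryTelemetry) -> str:
    """Return 'rollback', 'hold', 'promote' or 'complete' for the current stage."""
    if (t.cpu_load_delta > MAX_CPU_DELTA
            or t.inference_error_delta > MAX_ERROR_DELTA
            or t.missed_heartbeats > 0):
        return "rollback"  # revert affected vehicles to the last known-good partition
    if t.hours_observed < MIN_SOAK_HOURS:
        return "hold"
    return "promote" if stage_index < len(STAGES) - 1 else "complete"
```

Checking rollback triggers before the soak timer means a regression is acted on immediately rather than after the observation window closes.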

When the vehicle is connected, prefetch updates based on predicted connectivity windows and apply them during low-usage periods. When bandwidth is limited, push only critical patches and model deltas to keep transfers short. Integrate OTA with fleet services for scheduling, certificate rotation and audit logs so operators can inspect status from a single console. Use edge orchestration to bring new models into the vehicle sandbox and run staged validation locally, then promote them to mainline functions if tests pass.

Operationalize deployment with phased pilots: start with 5–10 vehicles for three months, extend to 50 vehicles for six months, then roll out fleet-wide. Track KPIs (latency, hazard rate, rollback frequency, update time) and feed results back into CI pipelines so AI model updates pass through the same gates as control software. Disciplined sizing, clear versioning and an OTA process built around signed, atomic updates and tight telemetry deliver efficient, safe updates for today's vehicles and for the future of automated driving.

Safety verification strategy: scenario creation, test matrix, pass/fail criteria and regulatory evidence

Execute a verification campaign that combines 10 million simulated kilometers and 100,000 real-world kilometers within 12 months, covering 1,500 base scenarios expanded to 150,000 parameterized variations. Allocate compute, instrumentation and field teams to sustain that cadence.

Create scenarios from three sources: fleet telemetry, targeted fault injection and synthetic generators. Start by collecting 200,000 edge events from naturalistic driving, labelling and annotating them to extract root cause signatures; then create hundreds of parameter sweeps per signature (speed, lateral offset, sensor occlusion, lighting, pedestrian intent) so this solution covers both common and rare causes without gaps.

Define a test matrix that crosses scenario families (intersection, lane change, cut-in, pedestrian crossing, sensor-failure) with operational axes: speed bands (0–30, 30–60, 60–120 km/h), road type (urban, suburban, highway), weather (clear, rain, snow, low sun), sensor degradation levels and system modes. Build an aggregate matrix of ~1.5M cells and use dashboard visualization to track pass rates by cell and ODD positioning; adopt forward-looking cells that model future traffic mixes (e-scooters, automated delivery bots).
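The test matrix is a Cartesian product of its axes. This minimal sketch uses the scenario families, speed bands, road types and weather conditions named above. The degradation levels and system modes are placeholders, because the text does not enumerate them. This coarse grid has 1,080 cells; a matrix of the size described above would need finer-grained axes and parameter sweeps.

```python
from itertools import product

# Axis values from the text; "degradation" and "mode" values are placeholders.
AXES = {
    "family": ["intersection", "lane_change", "cut_in", "pedestrian_crossing", "sensor_failure"],
    "speed_kmh": ["0-30", "30-60", "60-120"],
    "road": ["urban", "suburban", "highway"],
    "weather": ["clear", "rain", "snow", "low_sun"],
    "degradation": ["none", "partial", "severe"],
    "mode": ["nominal", "fallback"],
}


def enumerate_cells(axes):
    """Yield every test-matrix cell as a dict of axis -> value (Cartesian product)."""
    names = list(axes)
    for values in product(*(axes[n] for n in names)):
        yield dict(zip(names, values))


cells = list(enumerate_cells(AXES))
print(len(cells))
```

Keeping each cell as a plain dict makes it easy to key pass-rate dashboards by any axis combination.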

Set measurable pass/fail criteria: zero high-severity collisions with VRUs across full verification runs, intervention rate ≤0.1 per 1,000 km in real-world validation, probability of run-in collision ≤1×10⁻⁷ per km estimated from aggregated simulation+field evidence, and 95% confidence intervals for each metric. For perception, require true positive rate ≥99% for critical object classes at 30m and false positive rate ≤0.5/km in urban scenarios. Define tolerated root-cause classes and require mitigations that demonstrably remove the cause from repeated trials.
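Rate criteria such as "≤1×10⁻⁷ per km" need a confidence bound, not just an observed count. This minimal sketch computes an exact one-sided Poisson upper bound by bisection on the Poisson mean. Treating simulated and real kilometers as interchangeable exposure is a simplifying assumption that the safety case would need to justify.

```python
import math


def poisson_cdf(k: int, mu: float) -> float:
    """P(X <= k) for X ~ Poisson(mu); adequate for small k and mu below ~700 (exp underflow)."""
    term = math.exp(-mu)
    total = term
    for i in range(1, k + 1):
        term *= mu / i
        total += term
    return total


def rate_upper_bound(events: int, exposure_km: float, confidence: float = 0.95) -> float:
    """One-sided upper confidence bound on an event rate per km (exact Poisson)."""
    alpha = 1.0 - confidence
    lo, hi = 0.0, 10.0 * (events + 1)
    while poisson_cdf(events, hi) > alpha:  # widen until the bound is bracketed
        hi *= 2.0
    for _ in range(200):  # the CDF decreases in mu, so bisect on the mean
        mid = 0.5 * (lo + hi)
        if poisson_cdf(events, mid) > alpha:
            lo = mid
        else:
            hi = mid
    return hi / exposure_km


print(rate_upper_bound(0, 10_100_000))  # zero collisions over 10M sim km + 100k real km
```

With zero events, the bound reduces to −ln(0.05)/exposure ≈ 3.0/exposure. Applied to the combined 10.1M km, that gives roughly 3×10⁻⁷ per km. Under this simple model, raw exposure alone would therefore not demonstrate the 1×10⁻⁷ per km target.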

Operationalize failure management: automatically log every failed cell with deterministic replay and flag its causal triggers. Before closure, require a documented fix plus a regression sub-test that keeps failure recurrence below threshold. Keep a running list of events and their fixes, and feed failure-mode analysis back into scenario generation so you add corrective coverage instead of repeating unchanged tests.

Package regulatory evidence as an auditable bundle: scenario definitions (machine-readable), raw and processed logs, metric computation scripts, statistical reports, traceability matrix linking requirements to tests, and signed executive summaries. Include an executive note for the safety board and the program president that highlights aggregate exposure, residual risks and planned reductions; companies will append legal attestations and independent third-party reports where required.

Use continuous monitoring: replay fleet events into the simulator daily, aggregate new edge cases into a candidate pool, and push prioritized items into the active test matrix every sprint. Maintain visualizations that show coverage gaps and a forward-looking roadmap so teams stay aligned with the leadership-level safety plan and future regulatory expectations.

Deliverables checklist:

1) Scenario taxonomy and parameters.
2) Test matrix with 1.5M cells and pass/fail thresholds.
3) Aggregate statistical evidence from 10M sim km + 100k real km.
4) Deterministic replay artifacts for each failure.
5) Mitigation records and regression proofs.
6) Signed summaries for regulators.

This package should help fast-track approvals and keep the program on course toward a demonstrably safe product.